It’s been a few years since I made my post about getting Plex GPU transcoding to work in Docker inside an LXC container running on Proxmox. It has received quite a few updates over the years, based on feedback and my own observations, which fixed various problems and optimized several aspects.

I recently upgraded my Proxmox-host, and also wanted to update my NVIDIA-drivers. After the upgrade everything seemed to be fine, but Plex transcoding would not work. All my usual previous fixes did not solve the problem. I decided to “start from scratch”, and see if I could a) get it to work, b) simplify the setup, and c) improve where possible.

My previous guide might still work for you, so feel free to use that one if that suits your setup better. Note, however, that future updates/improvements will probably only be done on this new version of the guide.

As last time, I’ll assume you’ve got Proxmox and LXC set up, ready to go. When I originally wrote this guide, the Proxmox version was 8.4.1 and LXC was running Debian 12.10 Bookworm. Since then, the guide has been updated to use Proxmox version 9.x (Debian 13 Trixie). Keep in mind that my host has secure boot disabled in the BIOS, which is a common source of problems when dealing with this setup. There are ways of getting this setup working when using secure boot, but that’s outside the scope of this guide.
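Since Secure Boot is called out above as a common source of problems, it may be worth confirming its state before installing anything. The sketch below assumes the mokutil package is installed on the host (it may not be by default) and that mokutil --sb-state prints either "SecureBoot enabled" or "SecureBoot disabled". The helper name sb_state is hypothetical and not part of any package.

```shell
#!/usr/bin/env bash
# Classify the text printed by `mokutil --sb-state` (read from stdin).
# sb_state is a hypothetical helper name used only for this sketch.
sb_state() {
    local text
    text="$(cat)"
    case "$text" in
        *"SecureBoot enabled"*)  echo enabled ;;
        *"SecureBoot disabled"*) echo disabled ;;
        *)                       echo unknown ;;
    esac
}

# Example on the Proxmox host (assumes mokutil is installed):
#   mokutil --sb-state 2>&1 | sb_state
```

For the setup described in this guide, the example command should print disabled. A result of unknown only means the output was not recognized, for instance on a host that does not boot via EFI.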

In my example I’ll be running an LXC container named docker1 (ID 101) on my Proxmox host. Everything will be headless (i.e. no X involved). The LXC will be privileged with fuse=1,nesting=1 set as features. I’ll use an NVIDIA RTX 2000 Ada as the GPU. All commands will be run as root. Note that there might be other steps that need to be done if you attempt to run this in a rootless/unprivileged LXC container (see here for more information).

The referenced commands in this guide can for the most part be copy-pasted. Some of the steps are interactive and/or require you to make small changes on your own.

If you followed my previous v1 guide, it’s recommended to “revert” that setup (i.e. do a cleanup), so that you start with a clean slate. See here for more info.

As a side note: NVIDIA drivers now include two kernel module options: the previous proprietary version, and a new open-source alternative. While the open-source version is considered somewhat stable, it still doesn’t have 100% feature parity with the proprietary kernel module. For this guide, we’ll use the proprietary version.

Proxmox host

First step is to install the NVIDIA driver and relevant dependencies. It’s based on NVIDIA’s own installation guide for Debian, with notes from the headless-section, with some slight modifications. We also need to add some extra logic to ensure devices are created upon reboot (see here for more info).

# modify the apt repo sources to have "Components: main contrib non-free non-free-firmware"
Types: deb
URIs: http://deb.debian.org/debian/
Suites: trixie trixie-updates trixie-backports
Components: main contrib non-free non-free-firmware
Signed-By: /usr/share/keyrings/debian-archive-keyring.gpg

Types: deb
URIs: http://deb.debian.org/debian-security/
Suites: trixie-security
Components: main contrib non-free non-free-firmware
Signed-By: /usr/share/keyrings/debian-archive-keyring.gpg

# update + install headers

apt update

apt install proxmox-headers-$(uname -r)

# download nvidia keyring and install it

wget https://developer.download.nvidia.com/compute/cuda/repos/debian13/x86_64/cuda-keyring_1.1-1_all.deb

dpkg -i cuda-keyring_1.1-1_all.deb

apt update

# install nvidia drivers

# these are the compute-only (headless) versions of the drivers

# this makes sure we don't install any unnecessary packages (X drivers, etc)

apt install nvidia-driver-cuda nvidia-kernel-dkms

# make sure that all nvidia devices are loaded upon boot

cat >/etc/systemd/system/nvidia-pre-lxc-init.service <<'EOF'

[Unit]

Description=Initialize NVIDIA devices early (before Proxmox guests)

After=systemd-modules-load.service

Before=pve-guests.service

Wants=pve-guests.service

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStartPre=-/sbin/modprobe nvidia

ExecStartPre=-/sbin/modprobe nvidia_uvm

ExecStart=-/usr/bin/nvidia-smi -L

ExecStart=-/usr/bin/nvidia-modprobe -u -c=0

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable nvidia-pre-lxc-init.service

# reboot

After the reboot, the driver should be installed and working.

root@foobar:~# nvidia-smi

Sat Jan 10 23:01:34 2026

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 590.48.01 Driver Version: 590.48.01 CUDA Version: 13.1 |

+-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA RTX 2000 Ada Gene... On | 00000000:5E:00.0 Off | Off |

| 30% 37C P8 4W / 70W | 1MiB / 16380MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

root@foobar:~# systemctl status nvidia-persistenced.service

● nvidia-persistenced.service - NVIDIA Persistence Daemon

Loaded: loaded (/usr/lib/systemd/system/nvidia-persistenced.service; enabled; preset: enabled)

Active: active (running) since Sat 2026-01-10 23:01:14 CET; 31s ago

Invocation: 7e9cdd227ea440b9a1b33b806bb9b9d9

Process: 631444 ExecStart=/usr/bin/nvidia-persistenced --user nvidia-persistenced (code=exited, status=0/SUCCESS)

Main PID: 631448 (nvidia-persiste)

Tasks: 1 (limit: 230189)

Memory: 772K (peak: 2.2M)

CPU: 1.912s

CGroup: /system.slice/nvidia-persistenced.service

└─631448 /usr/bin/nvidia-persistenced --user nvidia-persistenced

Jan 10 23:01:13 foobar systemd[1]: Starting nvidia-persistenced.service - NVIDIA Persistence Daemon...

Jan 10 23:01:13 foobar nvidia-persistenced[631448]: Started (631448)

Jan 10 23:01:14 foobar systemd[1]: Started nvidia-persistenced.service - NVIDIA Persistence Daemon.

root@foobar:~# ls -alh /dev/nvidia* /dev/dri

crw-rw-rw- 1 root root 195, 0 Jan 10 20:49 /dev/nvidia0

crw-rw-rw- 1 root root 195, 255 Jan 10 20:49 /dev/nvidiactl

crw-rw-rw- 1 root root 195, 254 Jan 10 23:01 /dev/nvidia-modeset

crw-rw-rw- 1 root root 509, 0 Jan 10 20:49 /dev/nvidia-uvm

crw-rw-rw- 1 root root 509, 1 Jan 10 20:49 /dev/nvidia-uvm-tools

/dev/dri:

total 0

drwxr-xr-x 3 root root 80 Jan 10 20:31 .

drwxr-xr-x 22 root root 7.1K Jan 10 23:01 ..

drwxr-xr-x 2 root root 60 Jan 10 20:31 by-path

crw-rw---- 1 root video 226, 0 Jan 10 20:31 card0

/dev/nvidia-caps:

total 0

drwxr-xr-x 2 root root 80 Jan 10 20:49 .

drwxr-xr-x 22 root root 7.1K Jan 10 23:01 ..

cr-------- 1 root root 234, 1 Jan 10 20:49 nvidia-cap1

cr--r--r-- 1 root root 234, 2 Jan 10 20:49 nvidia-cap2

If the correct GPU shows up in nvidia-smi, the persistence service runs fine, and at least five files are available under /dev/nvidia*, we’re ready to proceed to the LXC container.

The number of files depends on your setup. If you don’t have a /dev/nvidia-caps folder, adding only the five files listed above should be fine. If you do have the /dev/nvidia-caps folder, you should add the two (or more) files within it as well. See here for more info.
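As a rough way to check for the five device files mentioned above, the sketch below lists any that are missing. The names missing_nodes and EXPECTED_NODES are hypothetical and used only for illustration. The optional root prefix exists only so the function can be tried against a scratch directory, and the check is for existence only, not that each path is a character device.

```shell
#!/usr/bin/env bash
# The five device nodes from the host listing above.
EXPECTED_NODES="/dev/nvidia0 /dev/nvidiactl /dev/nvidia-modeset /dev/nvidia-uvm /dev/nvidia-uvm-tools"

# Print every expected node that does not exist below an optional root prefix.
# Returns 0 when nothing is missing, 1 otherwise.
missing_nodes() {
    local root="${1:-}" node rc=0
    for node in $EXPECTED_NODES; do
        if [ ! -e "${root}${node}" ]; then
            printf '%s\n' "$node"
            rc=1
        fi
    done
    return "$rc"
}

# Example on the Proxmox host:
#   missing_nodes && echo "all five NVIDIA device files are present"
```

If anything is printed, the host side is probably not ready yet, and it makes little sense to continue with the LXC container.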

Note that the files under /dev/dri are not strictly needed for transcoding, but would be needed for other things like rendering or display applications such as VirtualGL. If you’re using Intel or AMD GPUs, they would also be needed. We’re adding them in this guide for completeness.
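To turn whatever device files exist on your host into entries for the LXC configuration in the next section, something like the following could be used. The helper name gen_dev_entries is hypothetical. It only numbers the paths it is given and appends an optional suffix, so review the output before pasting it into the container configuration.

```shell
#!/usr/bin/env bash
# Sketch: print LXC "devN:" entries for device paths read from stdin.
# gen_dev_entries is a hypothetical helper name, not part of Proxmox.
gen_dev_entries() {
    # Optional suffix, e.g. ",gid=1000,uid=1000" for unprivileged containers.
    local suffix="${1:-}"
    local i=0 path
    while IFS= read -r path; do
        [ -n "$path" ] || continue      # skip empty lines
        printf 'dev%d: %s%s\n' "$i" "$path" "$suffix"
        i=$((i + 1))
    done
}

# Example on the Proxmox host (character devices only):
#   find /dev/nvidia* /dev/dri -type c 2>/dev/null | sort | gen_dev_entries
```

The numbering simply follows the input order, so the indexes will not necessarily match the ones used in this guide. That should not matter as long as each index is used only once.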

LXC container

We need to add the relevant LXC configuration to our container. Shut down the LXC container, and make the following changes to the LXC configuration file. You may need to change/add some of these device entries depending on your hardware (for example, card0 might be the server’s internal VGA card, while card1 is your NVIDIA GPU).

# edit /etc/pve/lxc/101.conf and add the following

dev0: /dev/nvidia0

dev1: /dev/nvidiactl

dev2: /dev/nvidia-modeset

dev3: /dev/nvidia-uvm

dev4: /dev/nvidia-uvm-tools

dev5: /dev/nvidia-caps/nvidia-cap1

dev6: /dev/nvidia-caps/nvidia-cap2

dev7: /dev/dri/card0

dev8: /dev/dri/renderD128

# if you're running in unprivileged mode, you also need to add permissions

# you either add the lines above, or the lines below -- not both

# gid/uid might need to be changed to suit your lxc-setup

dev0: /dev/nvidia0,gid=1000,uid=1000

dev1: /dev/nvidiactl,gid=1000,uid=1000

dev2: /dev/nvidia-modeset,gid=1000,uid=1000

dev3: /dev/nvidia-uvm,gid=1000,uid=1000

dev4: /dev/nvidia-uvm-tools,gid=1000,uid=1000

dev5: /dev/nvidia-caps/nvidia-cap1,gid=1000,uid=1000

dev6: /dev/nvidia-caps/nvidia-cap2,gid=1000,uid=1000

dev7: /dev/dri/card0,gid=1000,uid=1000

dev8: /dev/dri/renderD128,gid=1000,uid=1000

We can now turn on the LXC container, and we’ll be ready to install the NVIDIA driver inside the LXC container.

# modify the apt repo sources to have "Components: main contrib non-free non-free-firmware"
Types: deb
URIs: http://deb.debian.org/debian/
Suites: trixie trixie-updates trixie-backports
Components: main contrib non-free non-free-firmware
Signed-By: /usr/share/keyrings/debian-archive-keyring.gpg

Types: deb
URIs: http://deb.debian.org/debian-security/
Suites: trixie-security
Components: main contrib non-free non-free-firmware
Signed-By: /usr/share/keyrings/debian-archive-keyring.gpg

# download nvidia keyring and install it

wget https://developer.download.nvidia.com/compute/cuda/repos/debian13/x86_64/cuda-keyring_1.1-1_all.deb

dpkg -i cuda-keyring_1.1-1_all.deb

apt update

# install nvidia drivers

# these are the compute-only (headless) versions of the drivers

# this makes sure we don't install any unnecessary packages (X drivers, etc)

apt install nvidia-driver-cuda

The installation above will also install kernel modules and other non-required files. Attempting to remove these will also remove the driver. We’ll work around this by making some small modifications. I have not yet found a way to do this differently (i.e. where only the required files are installed). If you find a way, please let me know.

# disable + mask persistence service

systemctl stop nvidia-persistenced.service

systemctl disable nvidia-persistenced.service

systemctl mask nvidia-persistenced.service

# remove kernel config

echo "" > /etc/modprobe.d/nvidia.conf

echo "" > /etc/modprobe.d/nvidia-modeset.conf

# block kernel modules

echo -e "blacklist nvidia\nblacklist nvidia_drm\nblacklist nvidia_modeset\nblacklist nvidia_uvm" > /etc/modprobe.d/blacklist-nvidia.conf

After a reboot, we should see the files we mounted & that the driver works as expected.

root@docker1:~# nvidia-smi

Sun Jan 11 04:57:11 2026

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 590.48.01 Driver Version: 590.48.01 CUDA Version: 13.1 |

+-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA RTX 2000 Ada Gene... Off | 00000000:5E:00.0 Off | Off |

| 30% 31C P8 4W / 70W | 2MiB / 16380MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

root@docker1:~# ls -alh /dev/nvidia* /dev/dri

crw-rw-rw- 1 root root 195, 0 Jan 11 04:41 /dev/nvidia0

crw-rw-rw- 1 root root 195, 255 Jan 11 04:41 /dev/nvidiactl

crw-rw---- 1 root root 195, 254 Jan 11 04:41 /dev/nvidia-modeset

crw-rw-rw- 1 root root 509, 0 Jan 11 04:44 /dev/nvidia-uvm

/dev/dri:

total 0

drwxr-xr-x 2 root root 80 Jan 11 04:41 .

drwxr-xr-x 9 root root 620 Jan 11 04:44 ..

crw-rw---- 1 root root 226, 1 Jan 11 04:41 card0

crw-rw---- 1 root root 226, 128 Jan 11 04:41 renderD128

/dev/nvidia-caps:

total 0

drwxr-xr-x 2 root root 80 Jan 11 04:44 .

drwxr-xr-x 9 root root 620 Jan 11 04:44 ..

cr-------- 1 root root 234, 1 Jan 11 04:44 nvidia-cap1

cr--r--r-- 1 root root 234, 2 Jan 11 04:44 nvidia-cap2

Docker container

Now we can address the Docker configuration/setup. If you didn’t purge/cleanup these aspects from my v1 guide, and you’re “converting” your system from v1 to v2, you should be able to skip to the next section (testing). If you’re doing a clean install, you need to do these next steps as well.

We’ll be using docker-compose, and we’ll also make sure to have the latest version by removing the Debian-provided docker and docker-compose. We’ll also install the Nvidia-provided Docker runtime. Both these are relevant in terms of making the GPU available within Docker.

# remove debian-provided packages

apt remove docker-compose docker docker.io containerd runc

# install docker from official repository

apt update

apt install ca-certificates curl gnupg lsb-release

curl -fsSL https://download.docker.com/linux/debian/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

echo \
"deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/debian \
$(lsb_release -cs) stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

apt update

apt install docker-ce docker-ce-cli containerd.io

# install docker-compose

curl -L "https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

# install docker-compose bash completion

curl \
    -L https://raw.githubusercontent.com/docker/cli/master/contrib/completion/bash/docker \
    -o /etc/bash_completion.d/docker-compose

# install NVIDIA Container Toolkit

apt install -y curl sudo

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg

curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

apt update

apt install nvidia-container-toolkit

# make sure that docker is configured

# this will modify your existing /etc/docker/daemon.json by adding relevant config

nvidia-ctk runtime configure --runtime=docker

# restart systemd + docker (if you don't reload systemd, it might not work)

systemctl daemon-reload

systemctl restart docker

We should now be able to run Docker containers with GPU support. Let’s test it.
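Before running the tests, it can be useful to confirm that nvidia-ctk actually registered the runtime. The sketch below assumes the default config path /etc/docker/daemon.json and simply searches the file for the string nvidia-container-runtime. The helper name has_nvidia_runtime is hypothetical, and this is a plain text search rather than real JSON parsing.

```shell
#!/usr/bin/env bash
# Sketch: succeed if the Docker daemon config mentions the NVIDIA runtime.
# has_nvidia_runtime is a hypothetical helper name; the default path below
# is an assumption and may differ on your system.
has_nvidia_runtime() {
    local cfg="${1:-/etc/docker/daemon.json}"
    [ -r "$cfg" ] && grep -q 'nvidia-container-runtime' "$cfg"
}

# Example inside the LXC container:
#   has_nvidia_runtime && echo "nvidia runtime is configured"
```

If the check fails, re-running nvidia-ctk runtime configure --runtime=docker and restarting Docker is probably the first thing to try.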

# nvidia/cuda doesn't support the "latest" tag. they also remove old releases,

# so we need to find the latest one. you can either run the oneliner below,

# or you can find the latest "base-ubuntu" tag manually on this page:

# https://hub.docker.com/r/nvidia/cuda/tags

root@docker1:~# latest_tag="`curl -s https://gitlab.com/nvidia/container-images/cuda/raw/master/doc/supported-tags.md | grep -i "base-ubuntu" | head -1 | perl -wple 's/.+\`(.+?)\`.+/$1/'`"

root@docker1:~# echo $latest_tag

12.8.1-base-ubuntu24.04

root@docker1:~# docker run --rm --gpus all nvidia/cuda:${latest_tag} nvidia-smi

Sun Apr 20 06:31:53 2025

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 570.133.20 Driver Version: 570.133.20 CUDA Version: 12.8 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA RTX A2000 Off | 00000000:82:00.0 Off | Off |

| 30% 29C P8 5W / 70W | 1MiB / 6138MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

root@docker1:~# docker run --rm -it --gpus all --runtime=nvidia linuxserver/ffmpeg -hwaccel nvdec -f lavfi -i testsrc2=duration=300:size=1280x720:rate=90 -c:v hevc_nvenc -qp 18 nvidia-hevc_nvec-90fps-300s.mp4

ffmpeg version 7.1.1 Copyright (c) 2000-2025 the FFmpeg developers

built with gcc 13 (Ubuntu 13.3.0-6ubuntu2~24.04)

configuration: --disable-debug --disable-doc --disable-ffplay --enable-alsa --enable-cuda-llvm --enable-cuvid --enable-ffprobe --enable-gpl --enable-libaom --enable-libass --enable-libdav1d --enable-libfdk_aac --enable-libfontconfig --enable-libfreetype --enable-libfribidi --enable-libharfbuzz --enable-libkvazaar --enable-liblc3 --enable-libmp3lame --enable-libopencore-amrnb --enable-libopencore-amrwb --enable-libopenjpeg --enable-libopus --enable-libplacebo --enable-librav1e --enable-librist --enable-libshaderc --enable-libsrt --enable-libsvtav1 --enable-libtheora --enable-libv4l2 --enable-libvidstab --enable-libvmaf --enable-libvorbis --enable-libvpl --enable-libvpx --enable-libvvenc --enable-libwebp --enable-libx264 --enable-libx265 --enable-libxml2 --enable-libxvid --enable-libzimg --enable-libzmq --enable-nonfree --enable-nvdec --enable-nvenc --enable-opencl --enable-openssl --enable-stripping --enable-vaapi --enable-vdpau --enable-version3 --enable-vulkan

libavutil 59. 39.100 / 59. 39.100

libavcodec 61. 19.101 / 61. 19.101

libavformat 61. 7.100 / 61. 7.100

libavdevice 61. 3.100 / 61. 3.100

libavfilter 10. 4.100 / 10. 4.100

libswscale 8. 3.100 / 8. 3.100

libswresample 5. 3.100 / 5. 3.100

libpostproc 58. 3.100 / 58. 3.100

Input #0, lavfi, from 'testsrc2=duration=300:size=1280x720:rate=90':

Duration: N/A, start: 0.000000, bitrate: N/A

Stream #0:0: Video: wrapped_avframe, yuv420p, 1280x720 [SAR 1:1 DAR 16:9], 90 fps, 90 tbr, 90 tbn

Stream mapping:

Stream #0:0 -> #0:0 (wrapped_avframe (native) -> hevc (hevc_nvenc))

Press [q] to stop, [?] for help

Output #0, mp4, to 'nvidia-hevc_nvec-90fps-300s.mp4':

Metadata:

encoder : Lavf61.7.100

Stream #0:0: Video: hevc (Main) (hev1 / 0x31766568), yuv420p(tv, progressive), 1280x720 [SAR 1:1 DAR 16:9], q=2-31, 2000 kb/s, 90 fps, 11520 tbn

Metadata:

encoder : Lavc61.19.101 hevc_nvenc

Side data:

cpb: bitrate max/min/avg: 0/0/2000000 buffer size: 4000000 vbv_delay: N/A

[out#0/mp4 @ 0x5cd43105b900] video:569284KiB audio:0KiB subtitle:0KiB other streams:0KiB global headers:0KiB muxing overhead: 0.056810%

frame=27000 fps=720 q=17.0 Lsize= 569607KiB time=00:04:59.96 bitrate=15555.8kbits/s speed= 8x

# while the above is running, you should see the process being run on the GPU

root@foobar:~# nvidia-smi

Sun Apr 20 08:45:04 2025

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 570.133.20 Driver Version: 570.133.20 CUDA Version: 12.8 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA RTX A2000 On | 00000000:82:00.0 Off | Off |

| 30% 39C P0 40W / 70W | 162MiB / 6138MiB | 15% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| 0 N/A N/A 124937 C /usr/local/bin/ffmpeg 152MiB |

+-----------------------------------------------------------------------------------------+

Yay! It’s working!

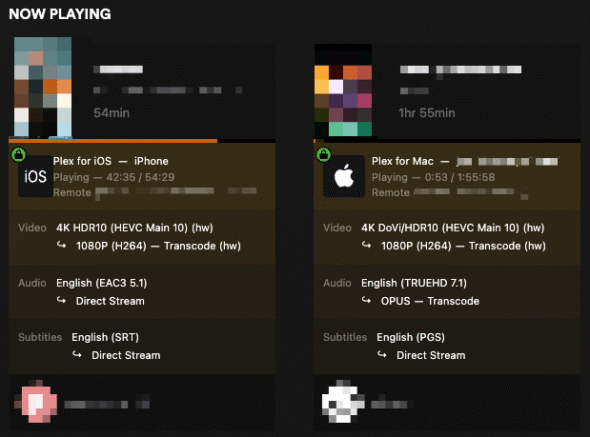

Let’s add the final pieces together for a fully working Plex docker-compose.yml:

services:
  plex:
    container_name: plex
    hostname: plex
    image: linuxserver/plex:latest
    restart: unless-stopped
    deploy:
      resources:
        reservations:
          devices:
            - capabilities: [gpu]
              count: all
    environment:
      TZ: Europe/Paris
      PUID: 0
      PGID: 0
      VERSION: latest
      NVIDIA_VISIBLE_DEVICES: all
      NVIDIA_DRIVER_CAPABILITIES: compute,video,utility
    network_mode: host
    volumes:
      - /srv/config/plex:/config
      - /storage/media:/data/media
      - type: tmpfs
        target: /tmp
        tmpfs:
          size: 8G

And it’s working! Woho!

root@foobar:~# nvidia-smi

Sun Apr 20 11:08:07 2025

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 570.133.20 Driver Version: 570.133.20 CUDA Version: 12.8 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA RTX A2000 On | 00000000:82:00.0 Off | Off |

| 30% 52C P0 40W / 70W | 1078MiB / 6138MiB | 9% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| 0 N/A N/A 272015 C ...exmediaserver/Plex Transcoder 516MiB |

| 0 N/A N/A 272569 C ...exmediaserver/Plex Transcoder 546MiB |

+-----------------------------------------------------------------------------------------+

Main differences from my original v1 guide

I’ll quickly try to explain the main differences between this and my original v1 guide:

- We use the Debian packages provided by NVIDIA, rather than manually installed drivers. This is the recommended setup from NVIDIA. The downside is that you can’t as easily cherry-pick a specific driver version, and you won’t always get the latest driver immediately (e.g. when I wrote this guide, driver version 570.144 had been released three days earlier, but only 570.133 was available via the NVIDIA repository). For our use case (Plex transcoding) I think that’s perfectly reasonable.

- We don’t have to manually download & install drivers. They are updated automatically on the host and in the LXC container when running apt update + apt upgrade. Yes, we need to upgrade on both the host and within the LXC container at the same time, but that’s completely manageable (and was required even when installing manually anyway). When I wrote my previous guide I could not get this setup working when using distro Debian packages inside the LXC container (due to multiple errors). By using the packages provided by NVIDIA (rather than from the distro), this now seems to work.

- We don’t have to manually fiddle with kernel modules, blocklists, udev-rules, nvidia-persistenced.service, etc. All of these things are handled by the packages automatically. Well, maybe with the exception of a few modifications within the LXC container, but still less than before.

- Use tmpfs for transcodes to make them quicker/snappier. This can of course also be implemented in the v1 setup.

- I’m pretty sure I would have gotten my previous setup working if I had tested the count: all fix, but ultimately I’m glad that I changed the setup/approach, as it’s simpler/easier to upgrade in the future. If you prefer to have full manual control, you could probably still use my v1 guide.

Upgrading

Upgrading should be a simple apt update followed by apt full-upgrade on both the Proxmox host and within the LXC container. After the upgrade has completed on the host, we also need to reinstall the NVIDIA driver once the headers for the new kernel have been installed.

Some changes are required if you upgrade from PVE8 (Debian 12/Bookworm) to PVE9 (Debian 13/Trixie). See this section for more info.

Proxmox host

# upgrade packages

apt update

apt full-upgrade

# reboot

# cleanup old packages/headers

apt autoremove

# download new headers

apt install proxmox-headers-$(uname -r)

# reinstall the drivers with new headers installed

apt install --reinstall nvidia-driver-cuda nvidia-kernel-dkms

LXC container

# upgrade packages

apt update

apt full-upgrade

# update docker compose

curl -L "https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

# update docker bash completion

curl \
    -L https://raw.githubusercontent.com/docker/cli/master/contrib/completion/bash/docker \
    -o /etc/bash_completion.d/docker-compose

# reboot

Cleanup from v1 of the guide

If you followed my previous v1 guide/setup, you should “clean up” before attempting to follow this updated guide. The steps below were what I needed to get a clean slate. They might not be suitable for your setup, so consider them a reference, not a “copy-paste” list of commands.

LXC container

We clean up the LXC container first, since things might behave strangely if we remove stuff on the host first, as the LXC container might be dependent on things from the host.

# uninstall old manually installed driver

# select "no" when asked about xconfig-restore

./NVIDIA-Linux-x86_64-550.100.run --uninstall

# remove nvidia packages

# WARNING: if you *only* have nvidia-container* libnvidia-container* and nvidia-docker2

# you can probably skip this (and avoid having to re-install this)

apt remove --purge -y xserver-xorg-*

apt remove --purge -y glx-alternative-nvidia

apt remove --purge -y nvidia*

apt remove --purge -y cuda*

apt remove --purge -y cudnn*

apt remove --purge -y libnvidia*

apt remove --purge -y libcuda*

apt remove --purge -y libcudnn*

dpkg --list | awk '{print $2}' | grep -E "(xserver-xorg-|glx-alternative-nvidia|nvidia|cuda|cudnn|libnvidia|libcuda|libcudnn)" | xargs dpkg --purge

Proxmox host

# remove nvidia kernel modules from /etc/modules-load.d/modules.conf

sed -i'' '/nvidia/d' /etc/modules-load.d/modules.conf

# remove kernel module blacklist of nouveau (as nvidia-packages deals with that)

rm /etc/modprobe.d/blacklist-nouveau.conf

# remove udev-rules

rm /etc/udev/rules.d/70-nvidia.rules

# uninstall nvidia persistence service

cp /usr/share/doc/NVIDIA_GLX-1.0/samples/nvidia-persistenced-init.tar.bz2 .

bunzip2 nvidia-persistenced-init.tar.bz2

tar -xf nvidia-persistenced-init.tar

chmod +x nvidia-persistenced-init/install.sh

./nvidia-persistenced-init/install.sh -r

rm -rf nvidia-persistenced-init*

# uninstall driver

./NVIDIA-Linux-x86_64-550.100.run --uninstall

# remove nvidia packages

apt remove --purge -y xserver-xorg-*

apt remove --purge -y glx-alternative-nvidia

apt remove --purge -y nvidia*

apt remove --purge -y cuda*

apt remove --purge -y cudnn*

apt remove --purge -y libnvidia*

apt remove --purge -y libcuda*

apt remove --purge -y libcudnn*

dpkg --list | awk '{print $2}' | grep -E "(xserver-xorg-|glx-alternative-nvidia|nvidia|cuda|cudnn|libnvidia|libcuda|libcudnn)" | xargs dpkg --purge

# update initramfs & reboot

update-initramfs -u

reboot

Problems encountered

Any problems encountered during the setup will be included in separate sections below. Most of them will be updated/incorporated in the sections above.

You can also have a look at the list of problems from the v1 guide, as some of them might be relevant for this guide as well (or your setup).

1. Problems with Plex transcode using Linux kernel 6.8.x

The “new” install method would still not make Plex transcode.

It seems that there have been issues with Plex transcoding on the 6.8.x kernel. See this forums.plex.tv discussion. For a while I thought this might be related, as upgrading to the latest Proxmox/Debian introduced the 6.8.x kernel. However, these issues seem to be mostly solved by now.

There were specific mentions that Plex would expect /dev/dri devices to be passed through (especially if you’ve enabled tone mapping in the Plex transcode settings), but I could not confirm whether this was strictly needed when using NVIDIA GPUs. Since it’s required for certain other aspects, I decided to add it to be on the safe side. This would be needed anyway if you’re using an Intel or AMD GPU.

This did however not solve the issue. See the next section below for further troubleshooting.

2. Devices under /dev inside Docker not populated

After a lot of troubleshooting, I noticed that a Docker container with the parameter --gpus would work, but not if I ran the same command via Docker Compose. The same docker-compose.yml that I’ve had “forever” would no longer work:

services:
  test:
    image: tensorflow/tensorflow:latest-gpu
    command: nvidia-smi
    deploy:
      resources:
        reservations:
          devices:
            - capabilities: [gpu]

Even running nvidia-smi would fail with the following error:

Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: exec: "nvidia-smi": executable file not found in $PATH: unknown

Looking further into this, I noticed that the relevant NVIDIA devices would not populate under /dev/:

/dev:

total 512

drwxr-xr-x 6 root root 360 Apr 20 08:34 .

drwxr-xr-x 17 root root 2 Apr 20 08:34 ..

lrwxrwxrwx 1 root root 11 Apr 20 08:34 core -> /proc/kcore

drwxr-xr-x 2 root root 100 Apr 20 08:34 dri

lrwxrwxrwx 1 root root 13 Apr 20 08:34 fd -> /proc/self/fd

crw-rw-rw- 1 root root 1, 7 Apr 20 08:34 full

drwxrwxrwt 2 root root 40 Apr 20 08:34 mqueue

crw-rw-rw- 1 root root 1, 3 Apr 20 08:34 null

lrwxrwxrwx 1 root root 8 Apr 20 08:34 ptmx -> pts/ptmx

drwxr-xr-x 2 root root 0 Apr 20 08:34 pts

crw-rw-rw- 1 root root 1, 8 Apr 20 08:34 random

lrwxrwxrwx 1 root root 15 Apr 20 08:34 stderr -> /proc/self/fd/2

lrwxrwxrwx 1 root root 15 Apr 20 08:34 stdin -> /proc/self/fd/0

lrwxrwxrwx 1 root root 15 Apr 20 08:34 stdout -> /proc/self/fd/1

crw-rw-rw- 1 root root 5, 0 Apr 20 08:34 tty

crw-rw-rw- 1 root root 1, 9 Apr 20 08:34 urandom

crw-rw-rw- 1 root root 1, 5 Apr 20 08:34 zero

After looking into this some more, I found this discussion. It seems that there was/is a bug in Docker Compose. If you haven’t set the count: parameter in the Docker Compose file, it will not load NVIDIA Container Runtime, which in turn doesn’t populate the /dev/ folder with the appropriate files.

Rather than setting a specific driver version or count, we can simply include all GPUs by setting count: all, like this:

services:
  test:
    image: tensorflow/tensorflow:latest-gpu
    command: nvidia-smi
    deploy:
      resources:
        reservations:
          devices:
            - capabilities: [gpu]
              count: all

root@docker1:~# docker compose up

[+] Running 1/1

✔ Container test-gpu-test-1 Recreated 0.1s

Attaching to test-1

test-1 | Sun Apr 20 08:48:47 2025

test-1 | +-----------------------------------------------------------------------------------------+

test-1 | | NVIDIA-SMI 570.133.20 Driver Version: 570.133.20 CUDA Version: 12.8 |

test-1 | |-----------------------------------------+------------------------+----------------------+

test-1 | | GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

test-1 | | Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

test-1 | | | | MIG M. |

test-1 | |=========================================+========================+======================|

test-1 | | 0 NVIDIA RTX A2000 Off | 00000000:82:00.0 Off | Off |

test-1 | | 30% 33C P8 11W / 70W | 4MiB / 6138MiB | 0% Default |

test-1 | | | | N/A |

test-1 | +-----------------------------------------+------------------------+----------------------+

test-1 |

test-1 | +-----------------------------------------------------------------------------------------+

test-1 | | Processes: |

test-1 | | GPU GI CI PID Type Process name GPU Memory |

test-1 | | ID ID Usage |

test-1 | |=========================================================================================|

test-1 | | No running processes found |

test-1 | +-----------------------------------------------------------------------------------------+

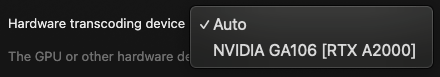

test-1 exited with code 0This, however, did not seem to solve the transcoding issue completely. It was a step in the right direction, as the GPU at least now showed up in the “Hardware transcoding device” list within Plex settings:

It did not solve the issues with Tensorflow, though. See the next section for more info regarding that.

3. Problems with the Tensorflow test

One of the tests that I used in my original guide was Tensorflow. After the initial upgrade where things stopped working, I couldn’t get the Tensorflow test to work either. This is the same issue that James struggled with. He opted to install xorg/X/etc, but that’s not something I wanted to do. This was one of the factors that led me to “start from scratch” on the setup.

After solving the count: all issue described in the previous section, my old Tensorflow test would still not work. However, there was a difference in output.

Output from before count: all fix:

2025-04-20 08:35:06.689498: E external/local_xla/xla/stream_executor/cuda/cuda_fft.cc:467] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered

WARNING: All log messages before absl::InitializeLog() is called are written to STDERR

E0000 00:00:1745138106.835511 1 cuda_dnn.cc:8579] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered

E0000 00:00:1745138106.876932 1 cuda_blas.cc:1407] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

W0000 00:00:1745138107.147055 1 computation_placer.cc:177] computation placer already registered. Please check linkage and avoid linking the same target more than once.

W0000 00:00:1745138107.147138 1 computation_placer.cc:177] computation placer already registered. Please check linkage and avoid linking the same target more than once.

W0000 00:00:1745138107.147145 1 computation_placer.cc:177] computation placer already registered. Please check linkage and avoid linking the same target more than once.

W0000 00:00:1745138107.147150 1 computation_placer.cc:177] computation placer already registered. Please check linkage and avoid linking the same target more than once.

2025-04-20 08:35:07.179493: I tensorflow/core/platform/cpu_feature_guard.cc:210] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.

To enable the following instructions: AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.

2025-04-20 08:35:12.422576: E external/local_xla/xla/stream_executor/cuda/cuda_platform.cc:51] failed call to cuInit: INTERNAL: CUDA error: Failed call to cuInit: UNKNOWN ERROR (34)

Output after fixing count: all:

2025-04-20 08:50:57.013493: E external/local_xla/xla/stream_executor/cuda/cuda_fft.cc:467] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered

WARNING: All log messages before absl::InitializeLog() is called are written to STDERR

E0000 00:00:1745139057.162458 1 cuda_dnn.cc:8579] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered

E0000 00:00:1745139057.203735 1 cuda_blas.cc:1407] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

W0000 00:00:1745139057.483344 1 computation_placer.cc:177] computation placer already registered. Please check linkage and avoid linking the same target more than once.

W0000 00:00:1745139057.483437 1 computation_placer.cc:177] computation placer already registered. Please check linkage and avoid linking the same target more than once.

W0000 00:00:1745139057.483443 1 computation_placer.cc:177] computation placer already registered. Please check linkage and avoid linking the same target more than once.

W0000 00:00:1745139057.483448 1 computation_placer.cc:177] computation placer already registered. Please check linkage and avoid linking the same target more than once.

2025-04-20 08:50:57.517940: I tensorflow/core/platform/cpu_feature_guard.cc:210] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.

To enable the following instructions: AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.

W0000 00:00:1745139063.945881 1 gpu_device.cc:2341] Cannot dlopen some GPU libraries. Please make sure the missing libraries mentioned above are installed properly if you would like to use GPU. Follow the guide at https://www.tensorflow.org/install/gpu for how to download and setup the required libraries for your platform.

Skipping registering GPU devices...

I still haven’t solved this issue, but since I don’t need Tensorflow to work, I haven’t spent any more time troubleshooting it. Maybe a reinstall of nvidia-container-toolkit would work, since NVIDIA recommends that you install it after you install the drivers (and we technically did that when converting from the old setup). If you still encounter this issue with a clean setup, then it’s something else…

4. Problems with environment variables

After still not getting things to work with the above fixes, I did some further troubleshooting. Amidst all the testing/troubleshooting, I had set the following two environment variables in the Plex container (as there were several references online to these):

NVIDIA_VISIBLE_DEVICES: all

NVIDIA_DRIVER_CAPABILITIES: all

In my previous config, these were set slightly differently:

NVIDIA_VISIBLE_DEVICES: all

NVIDIA_DRIVER_CAPABILITIES: compute,video,utility

Changing it back to the latter works (i.e. NVIDIA_DRIVER_CAPABILITIES: all will NOT work with Plex).
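If you want to sanity-check a value before putting it in a Compose file, something like the sketch below could help. The function name is hypothetical, and the rule it encodes (reject all, require compute, video and utility) is only based on what worked in my setup, not on anything Plex or NVIDIA documents as a requirement.

```shell
# Hypothetical helper: succeed only if the value is an explicit list that
# contains compute, video and utility. The literal value "all" is rejected,
# because that value did not work with Plex in the setup described here.
caps_ok_for_plex() {
    caps=$1
    if [ "$caps" = "all" ]; then
        return 1
    fi
    for needed in compute video utility; do
        case ",$caps," in
            *",$needed,"*) ;;      # capability present, keep checking
            *) return 1 ;;         # capability missing
        esac
    done
    return 0
}
```

For example, caps_ok_for_plex "compute,video,utility" succeeds, while caps_ok_for_plex all fails.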

5. Use tmpfs/RAM for faster transcoding

In my previous setup I discovered that transcodes would be slow if the transcode directory was using fuse-overlayfs. I also discovered that even if you set a transcoder temporary directory in Plex settings, it’s not used for everything (i.e. the “Detecting intros” jobs would also transcode, and used /tmp). Both of these were fixed by mounting folders from the LXC container into the Docker container.

However, there’s an even better way to do this. If you have enough RAM, you can use a tmpfs mount, which is temporary storage in memory/RAM. You can adjust its size in the docker-compose.yml file to something suitable for your available memory. Several people report success using 8GB as the size, which seems to support multiple simultaneous transcodes (but you might have to increase it if you expect many simultaneous transcodes). If you don’t have enough memory/RAM available, this might not be the solution for you, and you should consider using the local storage method from my v1 guide.

By using tmpfs, the transcodes should be even faster/snappier, especially when skipping within a movie/episode. The final docker-compose.yml for Plex takes both of these into account. You’ll have to set /tmp as the value for “Transcoder temporary directory” in the Plex settings. This way both transcodes and “Detecting intros” jobs will use the same path.
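To confirm that /tmp really ends up on tmpfs, you can look at the mount table. The sketch below is one way to do that; the function name is hypothetical, and it assumes the usual /proc/mounts layout (device, mount point, filesystem type as the first three fields).

```shell
# Hypothetical helper: succeed if the given mount point is backed by tmpfs.
# Reads /proc/mounts by default; a different mounts file can be passed as $2.
# If a path is mounted more than once, the last entry wins.
is_tmpfs() {
    awk -v p="$1" '$2 == p { t = $3 } END { exit (t == "tmpfs" ? 0 : 1) }' \
        "${2:-/proc/mounts}"
}
```

Inside the Plex container (for example via docker exec into a shell), is_tmpfs /tmp && echo "tmpfs in use" should print the message once the tmpfs mount is active.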

6. Upgrade to PVE9/Debian 13/Trixie

Upgrading from PVE8 (Debian 12/Bookworm) to PVE9 (Debian 13/Trixie) involves some changes to the NVIDIA driver repositories, including a new signing key. To fix this, download and install the new cuda-keyring package, and remove the old APT source file. The .deb file name is the same as before, but the content is different (yeah, it’s stupid, I know!). Do this both on the host and inside the LXC container.

wget https://developer.download.nvidia.com/compute/cuda/repos/debian13/x86_64/cuda-keyring_1.1-1_all.deb

dpkg -i cuda-keyring_1.1-1_all.deb

rm /etc/apt/sources.list.d/cuda-debian12-x86_64.list

Other than this, there should be no need to do any other changes (other than the normal Proxmox/Debian upgrade steps).
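If you are unsure whether an old source file is still lying around, a small sketch like this lists candidates without deleting anything. The function name is hypothetical, and the file name pattern is an assumption based on the cuda-debian12-x86_64.list file removed above.

```shell
# Hypothetical helper: list leftover Debian 12 CUDA source files.
# Only prints matches; removing them is left to you.
find_stale_cuda_sources() {
    dir=${1:-/etc/apt/sources.list.d}
    find "$dir" -maxdepth 1 -type f -name 'cuda-debian12-*.list'
}
```

Run find_stale_cuda_sources on both the host and inside the LXC container; no output means there is nothing left to clean up.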

Old info regarding APT source lists:

# modify the apt repo url by adding "contrib non-free non-free-firmware"

# /etc/apt/sources.list should contain something like this

deb https://deb.debian.org/debian bookworm main contrib non-free non-free-firmware

deb https://deb.debian.org/debian bookworm-updates main contrib non-free non-free-firmware

deb https://security.debian.org/debian-security bookworm-security main contrib non-free non-free-firmware

# old keyring (for pve8/debian12/bookworm)

wget https://developer.download.nvidia.com/compute/cuda/repos/debian12/x86_64/cuda-keyring_1.1-1_all.deb

dpkg -i cuda-keyring_1.1-1_all.deb

7. Driver pinning

If you want to use a specific driver version (e.g. if a certain version drops support for your GPU, like Sergio experienced), you can pin the driver version. This is not relevant for my use-case, so it’s not part of the main guide, but it’s mentioned here after Sergio brought it up.
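The pinning packages are named after the driver branch (e.g. nvidia-driver-pinning-580 for 580.x). A minimal sketch for deriving that name from a full version string is shown below; the function name is hypothetical, and it assumes the branch is simply the part before the first dot.

```shell
# Hypothetical helper: turn a driver version such as 580.95.05 into the
# name of the matching branch pinning package.
pinning_package_for() {
    version=$1
    branch=${version%%.*}          # keep everything before the first dot
    case $branch in
        ''|*[!0-9]*)
            echo "not a driver version: $version" >&2
            return 1
            ;;
    esac
    echo "nvidia-driver-pinning-$branch"
}
```

On a system where the driver already works, the current version can be read with nvidia-smi --query-gpu=driver_version --format=csv,noheader and passed to the function.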

NVIDIA has a complete guide on how to achieve this, but the gist is to install certain packages before installing the drivers:

# after installing cuda-keyring, before installing drivers

# stick to 580.x

apt install nvidia-driver-pinning-580

# stick to 590.x

apt install nvidia-driver-pinning-590

# install drivers

apt install nvidia-driver-cuda nvidia-kernel-dkms

8. Removing cgroups

Since PVE8.2 there is an easier way to pass through the GPU (thanks, Sergio, for bringing this to my attention). The old way of configuring this via cgroup + mount entries is outdated, and should not be used anymore. The new method is also much simpler. The guide has been updated to reflect this.
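To illustrate the format of the new method, the sketch below prints one devN: line per device path. The function name is hypothetical, and it assumes the container config has no existing devN: entries (numbering starts at 0). For an unprivileged container you would also need to append uid/gid options, as in Sergio’s comment further down.

```shell
# Hypothetical helper: print "devN: <path>" lines for the given device paths.
# Numbering starts at dev0, so check for clashes with existing entries first.
gen_dev_entries() {
    i=0
    for path in "$@"; do
        echo "dev$i: $path"
        i=$((i + 1))
    done
}
```

For example, gen_dev_entries /dev/nvidia0 /dev/nvidiactl /dev/nvidia-uvm prints three lines that can be reviewed and then added to /etc/pve/lxc/101.conf.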

The old cgroup-settings below for reference:

# edit /etc/pve/lxc/101.conf and add the following

lxc.cgroup2.devices.allow: c 195:* rwm

lxc.cgroup2.devices.allow: c 226:* rwm

lxc.cgroup2.devices.allow: c 234:* rwm

lxc.cgroup2.devices.allow: c 237:* rwm

lxc.cgroup2.devices.allow: c 238:* rwm

lxc.cgroup2.devices.allow: c 239:* rwm

lxc.cgroup2.devices.allow: c 240:* rwm

lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file

lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-modeset dev/nvidia-modeset none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-caps/nvidia-cap1 dev/nvidia-caps/nvidia-cap1 none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-caps/nvidia-cap2 dev/nvidia-caps/nvidia-cap2 none bind,optional,create=file

lxc.mount.entry: /dev/dri/card0 dev/dri/card0 none bind,optional,create=file

lxc.mount.entry: /dev/dri/card1 dev/dri/card1 none bind,optional,create=file

lxc.mount.entry: /dev/dri/renderD128 dev/dri/renderD128 none bind,optional,create=file

9. Devices not present before running nvidia-smi

After upgrading to Proxmox 9.x (Debian 13 Trixie) and/or upgrading the driver version, it seems like the NVIDIA persistence service isn’t working properly: some of the /dev/ entries are not created (mainly /dev/nvidia-caps/* and /dev/nvidia-uvm*). Also, after changing from cgroup2.devices + lxc.mount.entry to the new devN: method, the LXC container will not start unless every device referenced by a devN: entry is present. This was not a problem with the lxc.mount.entry method, as those entries fail gracefully if the devices are not present. The combination of these two issues causes the LXC container to not start after a reboot of the Proxmox host.
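To see which device nodes are missing after a reboot, a small check like the following can be run on the host. The function name is hypothetical, and the list of nodes is an assumption taken from the devices mentioned in this guide; adjust it to match your own devN: entries.

```shell
# Hypothetical helper: print every expected NVIDIA device node that does not
# exist under the given root (defaults to /dev). No output means all present.
list_missing_nvidia_devices() {
    root=${1:-/dev}
    for node in nvidia0 nvidiactl nvidia-modeset nvidia-uvm nvidia-uvm-tools \
                nvidia-caps/nvidia-cap1 nvidia-caps/nvidia-cap2; do
        [ -e "$root/$node" ] || echo "$root/$node"
    done
}
```

Running list_missing_nvidia_devices right after boot, and again after running nvidia-smi, should show which entries are only created on demand.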

In an attempt to solve this, I started looking into running nvidia-smi before the LXC container is automatically started after a reboot. I looked at lxc.hook.pre-start, and also the pre-start phase of the LXC hookscript, but they did not work (i.e. didn’t trigger before the logic that checks the devN entries).

# tried to add into /etc/pve/lxc/101.conf

# triggers too late (i.e. LXC complains about missing devices before this is run)

lxc.hook.pre-start: /usr/bin/nvidia-smi

# set hookscript

pct set 101 -hookscript local:snippets/hookscript.pl

# example snippet /usr/share/pve-docs/examples/guest-example-hookscript.pl

Also, at first it looked like the “on-demand” device entries didn’t strictly need to be passed through. It seemed like these were created separately inside the LXC container when you run nvidia-smi.

Specifically, /dev/nvidia-uvm, /dev/nvidia-caps/nvidia-cap1 and /dev/nvidia-caps/nvidia-cap2 were created, but not /dev/nvidia-uvm-tools, and ffmpeg would fail:

root@docker1:~# docker run --rm -it --gpus all --runtime=nvidia linuxserver/ffmpeg -hwaccel nvdec -f lavfi -i testsrc2=duration=300:size=1280x720:rate=90 -c:v hevc_nvenc -qp 18 nvidia-hevc_nvec-90fps-300s.mp4

[…]

[CUDA @ 0x5a459f4d9640] cu->cuInit(0) failed -> CUDA_ERROR_UNKNOWN: unknown error

Device creation failed: -542398533.

[vist#0:0/wrapped_avframe @ 0x5a459f4bb840] [dec:wrapped_avframe @ 0x5a459f4d8ac0] No device available for decoder: device type cuda needed for codec wrapped_avframe.

[vist#0:0/wrapped_avframe @ 0x5a459f4bb840] [dec:wrapped_avframe @ 0x5a459f4d8ac0] Hardware device setup failed for decoder: Generic error in an external library

Error opening output file nvidia-hevc_nvec-90fps-300s.mp4.

Error opening output files: Generic error in an external library

My solution: run some extra commands via systemd before the LXC container starts, so that all devices are present before Proxmox attempts to mount the devN entries into the Docker LXC. This is a somewhat ugly workaround, and it should be unnecessary, since this is supposed to be the literal job of the nvidia-persistenced service (which is running, but not doing its job). Still, it’s better than the alternatives I’ve found so far.

cat >/etc/systemd/system/nvidia-pre-lxc-init.service <<'EOF'

[Unit]

Description=Initialize NVIDIA devices early (before Proxmox guests)

After=systemd-modules-load.service

Before=pve-guests.service

Wants=pve-guests.service

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStartPre=-/sbin/modprobe nvidia

ExecStartPre=-/sbin/modprobe nvidia_uvm

ExecStart=-/usr/bin/nvidia-smi -L

ExecStart=-/usr/bin/nvidia-modprobe -u -c=0

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable nvidia-pre-lxc-init.service

Tremendous updated guide – just in time for me nuking my entire proxmox box and starting from scratch!

Thanks a million.

No problem, glad to be of help.

Hey and thanks for the guide, trying it right now :)

Feedback – This errors out because 1 url is wrong on apt update:

deb http://deb.debian.org/debian bookworm main contrib non-free non-free-firmware

deb http://deb.debian.org/debian bookworm-updates main contrib non-free non-free-firmware

deb http://deb.debian.org/debian bookworm-security main contrib non-free non-free-firmware

should be changed to:

deb http://deb.debian.org/debian bookworm main contrib non-free non-free-firmware

deb http://deb.debian.org/debian bookworm-updates main contrib non-free non-free-firmware

deb http://security.debian.org/debian-security bookworm-security main contrib non-free non-free-firmware

Debian doesnt provide the security updates under this url.

Good catch, thanks! Also noticed that I used http:// and not https:// as well. Updated the guide to reflect this.

This guide was exactly what I needed! I wish I had found this sooner too… Ended up wasting half a day stumbling through various YouTube videos when this spelled everything out so precisely. Many thanks internet stranger!

Glad to be of help!

This is only guide that worked for me. Thanks a lot

Only difference is, the permission are showing for me as following

crw-rw-rw- 1 nobody nogroup 195, 254 Jul 11 08:44 /dev/nvidia-modeset

crw-rw-rw- 1 nobody nogroup 509, 0 Jul 11 09:26 /dev/nvidia-uvm

crw-rw-rw- 1 nobody nogroup 509, 1 Jul 11 09:26 /dev/nvidia-uvm-tools

crw-rw-rw- 1 nobody nogroup 195, 0 Jul 11 08:44 /dev/nvidia0

crw-rw-rw- 1 nobody nogroup 195, 255 Jul 11 08:44 /dev/nvidiactl

/dev/dri:

total 0

drwxr-xr-x 2 root root 100 Jul 11 11:43 .

drwxr-xr-x 8 root root 620 Jul 11 11:43 ..

crw-rw---- 1 nobody nogroup 226, 0 Jul 11 08:44 card0

crw-rw---- 1 nobody nogroup 226, 1 Jul 11 08:44 card1

crw-rw---- 1 nobody nogroup 226, 128 Jul 11 08:44 renderD128

/dev/nvidia-caps:

total 0

drwxr-xr-x 2 root root 80 Jul 11 11:43 .

drwxr-xr-x 8 root root 620 Jul 11 11:43 ..

cr-------- 1 nobody nogroup 236, 1 Jul 11 09:26 nvidia-cap1

cr--r--r-- 1 nobody nogroup 236, 2 Jul 11 09:26 nvidia-cap2

Yes, the cgroup IDs are usually different from system to system. That is completely normal.

I took some of your scripts and turned them into one-liner shell script calls in this GitHub repo https://github.com/dmbeta/create-proxmox-nvidia-containers

I also added a few things of use to me, particularly:

– a script that parses your ls -alh /dev/nvidia command and generates a LXC mount config to append your conf with.

– setting up unattended-upgrades in the container while blacklisting automatic nvidia driver upgrades.

– some documentation on how to create a container template from all of this.

How do I buy you a beer? I’ve spent countless hours reading random tutorials and got nowhere. This solved it for me, thank you so much!

First of all, thanks for the guide. It was what managed to get things working for me in the first place.

I have a few suggestions based on problems I have encountered over time.

1. Driver pinning

Recently the proxmox kernel updated and so did nvidia drivers (version 590). To me, that dropped support for my GPU. Pinning the proxmox kernel would still call the newest drivers to be installed, so I had to pin the driver as well.

So my suggestion here would be to first check on nvidia website what the recommended driver is, then pin the driver (running apt install nvidia-driver-pinning-[version]) (as per https://docs.nvidia.com/datacenter/tesla/driver-installation-guide/version-locking.html) and only then proceed with installing nvidia-driver-cuda nvidia-kernel-dkms.

2. Removing Cgroups

I have the GPU working on an unprivileged LXC. Instead of playing around with cgroups, the easiest is to pass the device in the LXC config with UID and GID from the LXC user. That simplifies things a lot. For example:

dev0: /dev/nvidia0,gid=1000,uid=1000

dev1: /dev/nvidiactl,gid=1000,uid=1000

dev2: /dev/nvidia-modeset,gid=1000,uid=1000

dev3: /dev/nvidia-uvm,gid=1000,uid=1000

dev4: /dev/nvidia-uvm-tools,gid=1000,uid=1000

dev5: /dev/nvidia-caps/nvidia-cap1,gid=1000,uid=1000

dev6: /dev/nvidia-caps/nvidia-cap2,gid=1000,uid=1000

Hi,

Thanks for your comment.

I’ll make sure to make a note of driver pinning in the guide. It’s not relevant for my usecase, but I agree that it’s worth mentioning.

When it comes to the new way of doing device passthrough, that seems to have been introduced from PVE 8.2 and onwards, which technically was available on the version I made this updated guide with (8.4.1). However, I was not even aware that this was an option at that time (-:

I still have some questions surrounding this new mode, which I haven’t found any good answers to yet. Does it Just Work™ if you want to passthrough to multiple containers? Or does that require extra configuration/setup? What about other pre-requisites? Looking around, I see IOMMU mentioned as a potential requirement? I also find several threads with people having issues with this method… some of them might be configuration errors, though.

I’ll make a mental note of this, and try this method next time I’m upgrading or installing a new system.

For future reference; the guide has been updated to not use cgroups anymore (just like Sergio is mentioning), and I’ve also left a note of how to do version pinning for those that would need that. Thanks for the useful tips/feedback, Sergio!