I recently had to get GPU transcoding in Plex working. The setup involved running Plex inside a Docker container, inside an LXC container, running on top of Proxmox. I found some general guidelines online, but none that covered all aspects (especially the dual layer of containerization/virtualization). I ran into a few challenges getting this working properly, so I'll attempt to give a complete guide here.

Edit (2025-04-20): I've since created a new version of this guide (v2), and I recommend that you use it instead. Since I'm now running the new setup, I will not be able to validate any aspects of this old guide, and this version (v1) will probably not receive many updates.

I'll assume you've got Proxmox and LXC set up and ready to go, running Debian (specifically 11 Bullseye when this article was originally written; it has since been validated to work with 12 Bookworm as well). In my example I'll be running an LXC container named docker1 (ID 101) on my Proxmox host. Everything will be headless (i.e. no X involved). The LXC container will be privileged, with fuse=1,nesting=1 set as features. I'll use an Nvidia RTX A2000 as the GPU. All commands will be run as root. Note that additional steps might be needed if you attempt to run this in a rootless/unprivileged LXC container (see here for more information).

The commands in this guide can for the most part be copy-pasted. Some of the steps are interactive and/or require you to make small changes on your own.

Proxmox host

The first step is to install the drivers on the host. Nvidia has an official Debian repo that we could use. However, that introduces a potential problem: we later need to install the drivers in the LXC container without kernel modules. I could not find a way to do this using the packages in the official Debian repo, and therefore had to install the drivers manually within the LXC container. The other aspect is that the host and the LXC container need to run the same driver version (or else it won't work). If we install from the official Debian repo on the host and manually in the LXC container, we could easily end up with different versions (whenever you run apt upgrade on the host). To keep this as consistent as possible, we'll install the driver manually on both the host and within the LXC container.

# we need to disable the Nouveau kernel module before we can install NVIDIA drivers

echo -e "blacklist nouveau\noptions nouveau modeset=0" > /etc/modprobe.d/blacklist-nouveau.conf

update-initramfs -u

reboot

# install packages required to build NVIDIA kernel drivers (only needed on host)

apt install build-essential

# install pve headers matching your current kernel

# older Proxmox versions might need to use "pve-headers-*" rather than "proxmox-headers-*"

apt install proxmox-headers-$(uname -r)

# download + install latest nvidia driver

# the below lines automatically downloads the most current driver

latest_driver=$(curl -s https://download.nvidia.com/XFree86/Linux-x86_64/latest.txt | awk '{print $2}')

latest_driver_file=$(echo ${latest_driver} | cut -d'/' -f2)

curl -O "https://download.nvidia.com/XFree86/Linux-x86_64/${latest_driver}"

chmod +x ${latest_driver_file}

./${latest_driver_file} --check

./${latest_driver_file}

# after driver 560+ (or so), you may be prompted to choose between two kernel module variants

# one being the nvidia proprietary module (the old default), the other a new open kernel module

# for now I would choose the nvidia proprietary version

# answer "no" if it asks if you want to install 32-bit compatibility drivers

# answer "no" if it asks if it should update the X config
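Since the host and the LXC container must end up on exactly the same driver version, relying on latest.txt at two different points in time can cause a mismatch if NVIDIA publishes a new release in between. Below is a minimal sketch of pinning an explicit version instead. It assumes that the first field of latest.txt is the version, and that the download path follows the same <version>/NVIDIA-Linux-x86_64-<version>.run layout that latest.txt points to. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for pinning one driver version on both host and LXC.
# Assumption: installers live at <base>/<version>/NVIDIA-Linux-x86_64-<version>.run,
# matching the paths that latest.txt refers to.

NVIDIA_BASE_URL="https://download.nvidia.com/XFree86/Linux-x86_64"

# Print the installer file name for a version, e.g. 535.146.02
nvidia_driver_file() {
  printf 'NVIDIA-Linux-x86_64-%s.run\n' "$1"
}

# Print the full download URL for a version
nvidia_driver_url() {
  printf '%s/%s/%s\n' "$NVIDIA_BASE_URL" "$1" "$(nvidia_driver_file "$1")"
}

# Print only the version (assumed to be the first field) from the contents
# of latest.txt, so it can be written down and reused in the LXC container
latest_version_from() {
  printf '%s\n' "$1" | awk '{print $1}'
}

# Usage (same version on the host and in the container):
# driver_version=535.146.02
# curl -O "$(nvidia_driver_url "$driver_version")"
# chmod +x "$(nvidia_driver_file "$driver_version")"
```

With this approach, the same driver_version value is used on the host and later in the LXC container. The container still needs the --no-kernel-module flag.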

With the drivers installed, we need to configure which kernel modules are loaded and add some udev rules. This makes sure the proper kernel modules are loaded and that all the relevant device files are created at boot.

# add kernel modules
echo -e '\n# load nvidia modules\nnvidia-drm\nnvidia-uvm' >> /etc/modules-load.d/modules.conf

# add the following to /etc/udev/rules.d/70-nvidia.rules
# will create relevant device files within /dev/ during boot
KERNEL=="nvidia", RUN+="/bin/bash -c '/usr/bin/nvidia-smi -L && /bin/chmod 666 /dev/nvidia*'"
KERNEL=="nvidia_uvm", RUN+="/bin/bash -c '/usr/bin/nvidia-modprobe -c0 -u && /bin/chmod 0666 /dev/nvidia-uvm*'"
SUBSYSTEM=="module", ACTION=="add", DEVPATH=="/module/nvidia", RUN+="/usr/bin/nvidia-modprobe -m"
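The echo ... >> line above appends the module names every time it runs, so re-running this step leaves duplicate entries in modules.conf. Below is a small idempotent variant as a sketch. The function name is hypothetical, and the file path is the one used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append each module name to a modules-load.d file
# only if it is not already listed on a line of its own.
ensure_modules() {
  conf_file=$1
  shift
  touch "$conf_file"
  for mod in "$@"; do
    # -x matches the whole line, -F treats the module name literally
    if ! grep -qxF "$mod" "$conf_file"; then
      printf '%s\n' "$mod" >> "$conf_file"
    fi
  done
}

# Usage on the Proxmox host (as root):
# ensure_modules /etc/modules-load.d/modules.conf nvidia-drm nvidia-uvm
```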

To prevent the driver/kernel module from being unloaded whenever the GPU is not in use, we should run the persistence service provided by Nvidia. It's made available to us after the driver install.

# copy and extract
cp /usr/share/doc/NVIDIA_GLX-1.0/samples/nvidia-persistenced-init.tar.bz2 .
bunzip2 nvidia-persistenced-init.tar.bz2
tar -xf nvidia-persistenced-init.tar

# remove old service file, if any (to avoid a masked service)
rm -f /etc/systemd/system/nvidia-persistenced.service

# install
chmod +x nvidia-persistenced-init/install.sh
./nvidia-persistenced-init/install.sh

# check that it's ok
systemctl status nvidia-persistenced.service
rm -rf nvidia-persistenced-init*

If you've come this far without any errors, you're ready to reboot the Proxmox host. After the reboot, you should see output similar to the following (GPU type/info will of course differ depending on your GPU):

root@foobar:~# nvidia-smi

Wed Feb 23 01:34:17 2022

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 510.47.03 Driver Version: 510.47.03 CUDA Version: 11.6 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA RTX A2000 On | 00000000:82:00.0 Off | Off |

| 30% 36C P2 4W / 70W | 1MiB / 6138MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

root@foobar:~# systemctl status nvidia-persistenced.service

● nvidia-persistenced.service - NVIDIA Persistence Daemon

Loaded: loaded (/lib/systemd/system/nvidia-persistenced.service; enabled; vendor preset: enabled)

Active: active (running) since Wed 2022-02-23 00:18:04 CET; 1h 16min ago

Process: 9300 ExecStart=/usr/bin/nvidia-persistenced --user nvidia-persistenced (code=exited, status=0/SUCCESS)

Main PID: 9306 (nvidia-persiste)

Tasks: 1 (limit: 154511)

Memory: 512.0K

CPU: 1.309s

CGroup: /system.slice/nvidia-persistenced.service

└─9306 /usr/bin/nvidia-persistenced --user nvidia-persistenced

Feb 23 00:18:03 foobar systemd[1]: Starting NVIDIA Persistence Daemon...

Feb 23 00:18:03 foobar nvidia-persistenced[9306]: Started (9306)

Feb 23 00:18:04 foobar systemd[1]: Started NVIDIA Persistence Daemon.

root@foobar:~# ls -alh /dev/nvidia*

crw-rw-rw- 1 root root 195, 0 Jan 5 11:56 /dev/nvidia0

crw-rw-rw- 1 root root 195, 255 Jan 5 11:56 /dev/nvidiactl

crw-rw-rw- 1 root root 195, 254 Jan 5 11:56 /dev/nvidia-modeset

crw-rw-rw- 1 root root 237, 0 Jan 5 11:56 /dev/nvidia-uvm

crw-rw-rw- 1 root root 237, 1 Jan 5 11:56 /dev/nvidia-uvm-tools

/dev/nvidia-caps:

total 0

drw-rw-rw- 2 root root 80 Jan 5 11:56 .

drwxr-xr-x 19 root root 5.0K Jan 5 11:56 ..

cr-------- 1 root root 240, 1 Jan 5 11:56 nvidia-cap1

cr--r--r-- 1 root root 240, 2 Jan 5 11:56 nvidia-cap2

# the below are not needed for transcoding, but for other things like rendering

# or display applications like VirtualGL

root@foobar:~# ls -alh /dev/dri

total 0

drwxr-xr-x 3 root root 120 Jan 5 11:56 .

drwxr-xr-x 19 root root 5.0K Jan 5 11:56 ..

drwxr-xr-x 2 root root 100 Jan 5 11:56 by-path

crw-rw---- 1 root video 226, 0 Jan 5 11:56 card0

crw-rw---- 1 root video 226, 1 Jan 5 11:56 card1

crw-rw---- 1 root render 226, 128 Jan 5 11:56 renderD128

If the correct GPU shows up in nvidia-smi, the persistence service runs fine, and at least five files are available under /dev/nvidia*, we're ready to proceed to the LXC container.

The number of files depends on your setup. If you don't have a /dev/nvidia-caps folder, adding only the five files listed above should be enough. If you also have the /dev/nvidia-caps folder, you should add the two (or more) files within it as well. See here for more info.

Note that the files under /dev/dri are not strictly needed for transcoding, but would be needed for other things like rendering or display applications like VirtualGL.
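To check the device files programmatically rather than by eye, below is a small sketch. It reports which of the five base files listed above are missing, and it also lists any files under nvidia-caps so you know to pass those through as well. The function name and the optional directory argument (useful for testing) are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: report which of the five base NVIDIA device files are
# missing under a given /dev directory (defaults to /dev), and list any
# nvidia-caps files that should also be passed through.
# Returns 0 only if all five base files exist.
missing_nvidia_devices() {
  dev_dir=${1:-/dev}
  missing=0
  for name in nvidia0 nvidiactl nvidia-modeset nvidia-uvm nvidia-uvm-tools; do
    if [ ! -e "$dev_dir/$name" ]; then
      printf 'missing: %s/%s\n' "$dev_dir" "$name"
      missing=1
    fi
  done
  if [ -d "$dev_dir/nvidia-caps" ]; then
    for cap in "$dev_dir"/nvidia-caps/*; do
      # an empty directory leaves the glob unexpanded, so check existence
      [ -e "$cap" ] && printf 'caps: %s\n' "$cap"
    done
  fi
  return "$missing"
}

# Usage on the Proxmox host (and later inside the LXC container):
# missing_nvidia_devices && echo "all base device files present"
```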

LXC container

We need to add the relevant LXC configuration to our container. Shut down the LXC container, and make the following changes to its LXC configuration file:

# edit /etc/pve/lxc/101.conf and add the following
lxc.cgroup2.devices.allow: c 195:* rwm
lxc.cgroup2.devices.allow: c 237:* rwm
lxc.cgroup2.devices.allow: c 240:* rwm

# if you want to use the card for things other than transcoding,
# add the /dev/dri cgroup values as well
lxc.cgroup2.devices.allow: c 226:* rwm

# mount nvidia devices into LXC container
lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file
lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-modeset dev/nvidia-modeset none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-caps/nvidia-cap1 dev/nvidia-caps/nvidia-cap1 none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-caps/nvidia-cap2 dev/nvidia-caps/nvidia-cap2 none bind,optional,create=file

# if you want to use the card for things other than transcoding,
# mount entries for the files in /dev/dri should probably also be added

The numbers on the cgroup2 lines come from the fifth column (the device major number) in the device listings above. Using the examples above, we would add 195, 237 and 240 as the cgroup values. Also, in my setup the number of the two nvidia-uvm files changes randomly between two values, while the three others remain static. I don't know why they alternate between values (if you know how to make them static, please let me know), but LXC does not complain if you configure numbers that don't exist (i.e. we can add all of them to make sure it works).
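Picking the major numbers out of the ls output by hand is easy to get wrong. Below is a minimal sketch that reads ls -al output for the NVIDIA device files and prints matching lxc.cgroup2.devices.allow and lxc.mount.entry lines in the same format as the configuration above. The function name is hypothetical. The sketch also assumes the usual ls -l layout, where character devices show "MAJOR, MINOR" in the fifth and sixth columns, and where files under a directory header such as /dev/nvidia-caps: are listed by bare name.

```shell
#!/usr/bin/env bash
# Hypothetical generator: read "ls -al /dev/nvidia*" output on stdin and
# print LXC cgroup2 allow lines (one per distinct major number) followed
# by one bind-mount entry per character device.
nvidia_lxc_config() {
  awk '
    # Directory headers such as "/dev/nvidia-caps:" set the prefix for
    # the bare file names listed below them
    /^\/.*:$/ { dir = substr($0, 1, length($0) - 1); next }
    # Character devices start with "c"; $5 is "MAJOR," and $NF is the name
    /^c/ {
      major = $5
      sub(/,$/, "", major)
      path = $NF
      if (substr(path, 1, 1) != "/") path = dir "/" path
      if (!(major in seen)) { seen[major] = 1; majors[++n] = major }
      paths[++m] = path
    }
    END {
      for (i = 1; i <= n; i++)
        printf "lxc.cgroup2.devices.allow: c %s:* rwm\n", majors[i]
      for (i = 1; i <= m; i++)
        printf "lxc.mount.entry: %s %s none bind,optional,create=file\n", paths[i], substr(paths[i], 2)
    }
  '
}

# Usage on the Proxmox host; review the output before pasting it into
# /etc/pve/lxc/101.conf:
# ls -al /dev/nvidia* | nvidia_lxc_config
```

The generator only reflects the numbers at the time you run it. Because the nvidia-uvm number alternates in the setup described above, you may still want to add the alternative value by hand.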

We can now start the LXC container, and we'll be ready to install the Nvidia driver. This time we're going to install it without the kernel modules, so there is no need to install the kernel headers.

# the below lines automatically downloads the most current driver

latest_driver=$(curl -s https://download.nvidia.com/XFree86/Linux-x86_64/latest.txt | awk '{print $2}')

latest_driver_file=$(echo ${latest_driver} | cut -d'/' -f2)

curl -O "https://download.nvidia.com/XFree86/Linux-x86_64/${latest_driver}"

chmod +x ${latest_driver_file}

./${latest_driver_file} --check

./${latest_driver_file} --no-kernel-module

# after driver 560+ (or so), you may be prompted to choose between two kernel module variants

# one being the nvidia proprietary module (the old default), the other a new open kernel module

# for now I would choose the nvidia proprietary version

# answer "no" if it asks if you want to install 32-bit compatibility drivers

# answer "no" if it asks if it should update the X config

At this point you should be able to reboot your LXC container. Verify that the device files and the driver work as expected before moving on to the Docker setup.

root@docker1:~# ls -alh /dev/nvidia*
crw-rw-rw- 1 root root 195, 0 Jan 5 11:56 /dev/nvidia0
crw-rw-rw- 1 root root 195, 255 Jan 5 11:56 /dev/nvidiactl
crw-rw-rw- 1 root root 195, 254 Jan 5 11:56 /dev/nvidia-modeset
crw-rw-rw- 1 root root 237, 0 Jan 5 11:56 /dev/nvidia-uvm
crw-rw-rw- 1 root root 237, 1 Jan 5 11:56 /dev/nvidia-uvm-tools

/dev/nvidia-caps:
total 0
drwxr-xr-x 2 root root 80 Jan 5 15:22 .
drwxr-xr-x 8 root root 640 Jan 5 15:22 ..
cr-------- 1 root root 240, 1 Jan 5 15:22 nvidia-cap1
cr--r--r-- 1 root root 240, 2 Jan 5 15:22 nvidia-cap2

root@docker1:~# nvidia-smi
Wed Feb 23 01:50:15 2022
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 510.47.03 Driver Version: 510.47.03 CUDA Version: 11.6 |
|-------------------------------+----------------------+----------------------+
| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|===============================+======================+======================|
| 0 NVIDIA RTX A2000 Off | 00000000:82:00.0 Off | Off |
| 30% 34C P8 10W / 70W | 3MiB / 6138MiB | 0% Default |
| | | N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes: |
| GPU GI CI PID Type Process name GPU Memory |
| ID ID Usage |
|=============================================================================|
| No running processes found |
+-----------------------------------------------------------------------------+

Docker container

Now we can move on to getting Docker working. We'll be using docker-compose, and we'll make sure to have the latest version by removing the Debian-provided docker and docker-compose packages. We'll also install the Nvidia-provided Docker runtime. Both of these are needed to make the GPU available within Docker.

# remove debian-provided packages

apt remove docker-compose docker docker.io containerd runc

# install docker from official repository

apt update

apt install ca-certificates curl gnupg lsb-release

curl -fsSL https://download.docker.com/linux/debian/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/debian \
  $(lsb_release -cs) stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

apt update

apt install docker-ce docker-ce-cli containerd.io

# install docker-compose

curl -L "https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

# install docker-compose bash completion

curl \
  -L https://raw.githubusercontent.com/docker/cli/master/contrib/completion/bash/docker \
  -o /etc/bash_completion.d/docker-compose

# install NVIDIA Container Toolkit

apt install -y curl

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg

curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

apt update

apt install nvidia-container-toolkit

# restart systemd + docker (if you don't reload systemd, it might not work)

systemctl daemon-reload

systemctl restart docker

We should now be able to run Docker containers with GPU support. Let’s test it.

# nvidia/cuda doesn't support the "latest" tag. they also remove old releases,

# so we need to find the latest one. you can either run the oneliner below,

# or you can find the latest "base-ubuntu" tag manually on this page:

# https://hub.docker.com/r/nvidia/cuda/tags

root@docker1:~# latest_tag="`curl -s https://gitlab.com/nvidia/container-images/cuda/raw/master/doc/supported-tags.md | grep -i "base-ubuntu" | head -1 | perl -wple 's/.+\`(.+?)\`.+/$1/'`"

root@docker1:~# docker run --rm --gpus all nvidia/cuda:${latest_tag} nvidia-smi

Tue Feb 22 22:15:14 2022

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 510.47.03 Driver Version: 510.47.03 CUDA Version: 11.6 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA RTX A2000 Off | 00000000:82:00.0 Off | Off |

| 30% 29C P8 4W / 70W | 1MiB / 6138MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

root@docker1:~# cat docker-compose.yml

services:
  test:
    image: tensorflow/tensorflow:latest-gpu
    command: python -c "import tensorflow as tf;tf.test.gpu_device_name()"
    deploy:
      resources:
        reservations:
          devices:
            - capabilities: [gpu]
              count: all

root@docker1:~# docker-compose up

Starting test_test_1 ... done

Attaching to test_test_1

test_1 | 2022-02-22 22:49:00.691229: I tensorflow/core/platform/cpu_feature_guard.cc:151] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA

test_1 | To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

test_1 | 2022-02-22 22:49:02.119628: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1525] Created device /device:GPU:0 with 4141 MB memory: -> device: 0, name: NVIDIA RTX A2000, pci bus id: 0000:82:00.0, compute capability: 8.6

test_test_1 exited with code 0

Yay! It’s working!

Keep in mind that I've experienced issues where tensorflow complains about the "kernel version not matching the DSO version" (please see more information here). If this happens to you, try a different tensorflow tag and/or a different driver version (so that the kernel and DSO versions match).

Let's put the final pieces together for a fully working Plex docker-compose.yml.

services:
  plex:
    container_name: plex
    hostname: plex
    image: linuxserver/plex:latest
    restart: unless-stopped
    deploy:
      resources:
        reservations:
          devices:
            - capabilities: [gpu]
              count: all
    environment:
      TZ: Europe/Paris
      PUID: 0
      PGID: 0
      VERSION: latest
      NVIDIA_VISIBLE_DEVICES: all
      NVIDIA_DRIVER_CAPABILITIES: compute,video,utility
    network_mode: host
    volumes:
      - /srv/config/plex:/config
      - /storage/media:/data/media
      - /storage/temp/plex/transcode:/transcode
      - /storage/temp/plex/tmp:/tmp

And it’s working! Woho!

If you have a consumer-grade GPU, you might also want to have a look at nvidia-patch, a toolkit that removes the restriction NVIDIA imposes on the maximum number of simultaneous NVENC video encoding sessions. This could potentially let Plex run more transcodes in parallel.

Upgrading

Whenever you upgrade the kernel, you need to re-install the driver on the Proxmox host. If you want to keep the same NVIDIA driver version, the process is simple: just re-run the original driver install (after installing the headers for the new kernel). There should be no need to do anything in the LXC container (as the version stays the same, and no kernel modules are involved).

# install headers matching the new kernel (needed to rebuild the kernel module)

# older Proxmox versions might need to use "pve-headers-*" rather than "proxmox-headers-*"

apt install proxmox-headers-$(uname -r)

# answer "no" if it asks if you want to install 32-bit compatibility drivers

# answer "no" if it asks if it should update the X config

./NVIDIA-Linux-x86_64-510.47.03.run

reboot

If you want to upgrade the NVIDIA driver, there are a few extra steps. If you already have a working NVIDIA driver (i.e. you did not just update the kernel), you have to uninstall the old NVIDIA driver first. Otherwise the installer will complain that the kernel module is loaded, and the module is instantly reloaded if you attempt to unload it.

# uninstall old driver to avoid kernel modules being loaded

# this step can be skipped if driver is broken after kernel update

./NVIDIA-Linux-x86_64-510.47.03.run --uninstall

reboot

# if you upgraded kernel, we need to download new headers

# older Proxmox versions might need to use "pve-headers-*" rather than "proxmox-headers-*"

apt install proxmox-headers-$(uname -r)

# install latest version

# (if you skipped the manual uninstall, the installer will offer to uninstall the old version)

# the below lines automatically downloads the most current driver

latest_driver=$(curl -s https://download.nvidia.com/XFree86/Linux-x86_64/latest.txt | awk '{print $2}')

latest_driver_file=$(echo ${latest_driver} | cut -d'/' -f2)

curl -O "https://download.nvidia.com/XFree86/Linux-x86_64/${latest_driver}"

chmod +x ${latest_driver_file}

./${latest_driver_file} --check

./${latest_driver_file}

# after driver 560+ (or so), you may be prompted to choose between two kernel module variants

# one being the nvidia proprietary module (the old default), the other a new open kernel module

# for now I would choose the nvidia proprietary version

# answer "no" if it asks if you want to install 32-bit compatibility drivers

# answer "no" if it asks if it should update the X config

reboot

# new driver should now be installed and working

root@foobar:~# nvidia-smi

Sat Sep 3 06:04:04 2022

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 535.146.02 Driver Version: 535.146.02 CUDA Version: 12.2 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA RTX A2000 On | 00000000:82:00.0 Off | Off |

| 30% 32C P8 4W / 70W | 1MiB / 6138MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

Please also check whether the cgroup numbers have changed. I've experienced that they can change between distro upgrades (especially major versions, i.e. going from Debian 11 to Debian 12). If they have changed, update the LXC configuration file accordingly (see the installation section of this guide).
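Before moving on to the container, it can be worth confirming that the nvidia kernel module on disk was actually built for the kernel you're running. A mismatch here is exactly what breaks after a kernel upgrade. Below is a sketch. It assumes that modinfo -F vermagic nvidia prints a string whose first field is the kernel release, as is the norm for out-of-tree modules. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed if the kernel release a module was built for
# (first field of its vermagic string) equals the given kernel release.
module_matches_kernel() {
  vermagic=$1
  release=$2
  built_for=${vermagic%% *}   # keep everything before the first space
  [ "$built_for" = "$release" ]
}

# Usage on the Proxmox host:
# if module_matches_kernel "$(modinfo -F vermagic nvidia)" "$(uname -r)"; then
#   echo "nvidia module matches the running kernel"
# else
#   echo "module was built for another kernel: re-run the driver installer"
# fi
```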

We must now upgrade the driver in the LXC container, as the host and the container need to run the same version:

# download latest version

# the below lines automatically downloads the most current driver

latest_driver=$(curl -s https://download.nvidia.com/XFree86/Linux-x86_64/latest.txt | awk '{print $2}')

latest_driver_file=$(echo ${latest_driver} | cut -d'/' -f2)

curl -O "https://download.nvidia.com/XFree86/Linux-x86_64/${latest_driver}"

chmod +x ${latest_driver_file}

./${latest_driver_file} --check

./${latest_driver_file} --no-kernel-module

# after driver 560+ (or so), you may be prompted to choose between two kernel module variants

# one being the nvidia proprietary module (the old default), the other a new open kernel module

# for now I would choose the nvidia proprietary version

# answer "no" if it asks if you want to install 32-bit compatibility drivers

# answer "no" if it asks if it should update the X config

root@docker1:~# nvidia-smi

Sat Sep 3 06:11:04 2022

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 535.146.02 Driver Version: 535.146.02 CUDA Version: 12.2 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA RTX A2000 Off | 00000000:82:00.0 Off | Off |

| 30% 30C P8 4W / 70W | 1MiB / 6138MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

# update nvidia container toolkit repo + update

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg

curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

apt update

apt install nvidia-container-toolkit

apt upgrade

Below is a one-time upgrade/change if you have an old setup, due to changes in the NVIDIA Container Toolkit.

# update nvidia container toolkit repo + update

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg

curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

apt update

apt install nvidia-container-toolkit

apt upgrade

Reboot the LXC container, and things should work with the new driver.
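To double-check that the host and the container ended up on the same version, one option is to ask nvidia-smi for just the driver version on each side. Below is a sketch meant to be run from the Proxmox host. It assumes a single GPU, container ID 101 from this guide, and that pct exec is available. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: compare two driver version strings (whitespace and
# newlines ignored) and print a short verdict; returns 1 on mismatch.
compare_driver_versions() {
  host_ver=$(printf '%s' "$1" | tr -d '[:space:]')
  ct_ver=$(printf '%s' "$2" | tr -d '[:space:]')
  if [ -n "$host_ver" ] && [ "$host_ver" = "$ct_ver" ]; then
    printf 'OK: both on %s\n' "$host_ver"
  else
    printf 'MISMATCH: host=%s container=%s\n' "$host_ver" "$ct_ver"
    return 1
  fi
}

# Usage on the Proxmox host (container ID 101 as in this guide):
# compare_driver_versions \
#   "$(nvidia-smi --query-gpu=driver_version --format=csv,noheader)" \
#   "$(pct exec 101 -- nvidia-smi --query-gpu=driver_version --format=csv,noheader)"
```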

Problems encountered

When trying to get everything working, I had a few challenges. The solutions have all been incorporated in the above sections, but I’ll briefly mention them for reference here.

1. nvidia-smi not working in Docker container

I got the error message Failed to initialize NVML: Unknown Error when running nvidia-smi within the Docker container. This turned out to be caused by cgroup2 superseding cgroup (v1) on the host.

My initial workaround was to disable cgroup2 and revert to cgroup (v1). This can be done via a kernel command-line parameter, like this:

# assuming EFI/UEFI (legacy BIOS setups need other commands)
echo "$(cat /etc/kernel/cmdline) systemd.unified_cgroup_hierarchy=false" > /etc/kernel/cmdline
proxmox-boot-tool refresh

However, the proper fix is to change the lxc.cgroup.devices.allow lines in the LXC config file to lxc.cgroup2.devices.allow, which permanently resolves the issue.
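The rename from lxc.cgroup.devices.allow to lxc.cgroup2.devices.allow can be done with a single sed expression. Below is a sketch that writes the result to stdout, so you can diff it before replacing /etc/pve/lxc/101.conf. It also shows one way to check which cgroup version the host runs: stat -f -c %T /sys/fs/cgroup prints cgroup2fs on a unified (cgroup v2) hierarchy. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite cgroup v1 device rules to their cgroup v2
# names and print the result (the input file is left untouched).
migrate_cgroup_lines() {
  sed 's/^lxc\.cgroup\.devices\.allow:/lxc.cgroup2.devices.allow:/' "$1"
}

# Print the filesystem type of /sys/fs/cgroup ("cgroup2fs" means cgroup v2)
cgroup_fs_type() {
  stat -f -c %T /sys/fs/cgroup
}

# Usage on the Proxmox host:
# cgroup_fs_type
# migrate_cgroup_lines /etc/pve/lxc/101.conf > /tmp/101.conf.new
# diff /etc/pve/lxc/101.conf /tmp/101.conf.new
```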

2. docker-compose GPU config

The official documentation for docker-compose and Plex states that GPU support is added via the runtime parameter. However, the docker and docker-compose versions from the stable Debian 11 repository could not use the runtime: nvidia parameter.

The newer way to use a GPU in docker-compose, the deploy parameter, is only supported in docker-compose v1.28.0 and newer. That is newer than what's included in the stable Debian 11 repository, so we need to install the latest versions to be able to use the deploy parameter.
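If you're unsure whether an installed docker-compose is new enough for the deploy parameter, you can compare its version against 1.28.0 with sort -V (GNU coreutils). Below is a sketch. The function name is hypothetical, and the sketch assumes that docker-compose version --short prints only the version number (a leading "v" is tolerated).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 >= version $2, using GNU sort -V.
version_ge() {
  a=${1#v}    # tolerate a leading "v", e.g. "v2.24.6"
  b=${2#v}
  # the smaller of the two sorts first; if that is $b, then $a >= $b
  [ "$(printf '%s\n%s\n' "$a" "$b" | sort -V | head -n 1)" = "$b" ]
}

# Usage:
# if version_ge "$(docker-compose version --short)" 1.28.0; then
#   echo "deploy-based GPU reservations should be supported"
# fi
```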

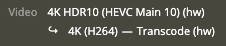

3. docker-compose GPU environment variables

GPU transcoding in Plex did not work with just the deploy parameter. It also needs the following two environment variables in order to work. This was not clearly documented, and caused some frustration when trying to get everything working.

NVIDIA_VISIBLE_DEVICES: all
NVIDIA_DRIVER_CAPABILITIES: compute,video,utility
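Since these variables are easy to forget, a quick sanity check on the compose file can save some head-scratching. Below is a rough grep-based sketch. It assumes the variables are written in the KEY: value map form used in this guide (the list form, - KEY=value, would need a different pattern). It also checks for the video capability, which the NVIDIA Container Toolkit documents as the one needed for the Video Codec SDK. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: report NVIDIA variables missing from a compose file
# written in the "KEY: value" map style used in this guide.
check_gpu_env() {
  compose_file=$1
  status=0
  for var in NVIDIA_VISIBLE_DEVICES NVIDIA_DRIVER_CAPABILITIES; do
    if ! grep -Eq "^[[:space:]]*${var}:" "$compose_file"; then
      printf 'missing: %s\n' "$var"
      status=1
    fi
  done
  # video encode/decode needs the "video" driver capability
  if ! grep -Eq '^[[:space:]]*NVIDIA_DRIVER_CAPABILITIES:.*video' "$compose_file"; then
    printf 'NVIDIA_DRIVER_CAPABILITIES does not include video\n'
    status=1
  fi
  return "$status"
}

# Usage inside the LXC container:
# check_gpu_env docker-compose.yml && echo "GPU environment variables present"
```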

4. High CPU usage from fuse-overlayfs

I also observed high CPU usage from fuse-overlayfs (the storage driver I'm using for Docker), caused by the Plex container. It turned out to be the "Detecting intros" background task, which transcodes the audio (to find the intros). It used /tmp as its transcode directory, which was part of the root (/) filesystem mounted via fuse-overlayfs. This happened despite having the transcode path set to /transcode (Settings -> Transcoder temporary directory). Normal transcoding seems to use /transcode, so only the "Detecting intros" task appears to have this problem. Mounting /tmp as a separate volume made the issue go away.

5. Updating NVIDIA Container Toolkit

NVIDIA seems to update their repository URLs every other day, so it might be a good idea to update these URLs whenever you upgrade the NVIDIA drivers. I've added the relevant steps in the upgrade section above. Keep in mind that at some point the package was called nvidia-docker2, but it is now called nvidia-container-toolkit. The nvidia-docker2 package now depends on nvidia-container-toolkit, so both will be installed if you previously only installed nvidia-docker2.

6. Running in rootless LXC

The above guide was written using a privileged LXC container. The user tony mentioned that he had issues getting his setup working with this guide, and referenced this link (which in turn refers to this link). There, the solution seems to be to add a setting to the NVIDIA container runtime configuration file:

# add "no-cgroups = true" under the [nvidia-container-cli] section
# in the file /etc/nvidia-container-runtime/config.toml
# the configuration file should already have this setting commented out,
# so you could change this by doing the following
sed -i'' 's/^#no-cgroups = false/no-cgroups = true/;' /etc/nvidia-container-runtime/config.toml

Old NVIDIA documentation mentions that this change should only be done if you run your containers as a rootless/non-privileged user. If you run your containers as root/privileged user, you should not have to change this.

In my setup, setting no-cgroups = true actually breaks GPU passthrough into Docker (probably because my setup runs in privileged mode):

root@e68599816a33:/# nvidia-smi
Failed to initialize NVML: Unknown Error

After setting it back to the default (disabled), it works just fine again.
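To see which value is currently active, and to restore the default after trying the change above on a privileged setup, something along these lines should work. Below is a sketch. It assumes the stock config.toml layout, where the default line reads #no-cgroups = false. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for /etc/nvidia-container-runtime/config.toml

# Print the effective no-cgroups value; an absent or commented-out setting
# is reported as "false" (the default)
no_cgroups_value() {
  value=$(sed -n 's/^[[:space:]]*no-cgroups[[:space:]]*=[[:space:]]*\([a-z]*\).*/\1/p' "$1" | tail -n 1)
  printf '%s\n' "${value:-false}"
}

# Undo the change from this section by restoring the commented-out default
revert_no_cgroups() {
  sed -i 's/^no-cgroups = true/#no-cgroups = false/' "$1"
}

# Usage inside the LXC container:
# no_cgroups_value /etc/nvidia-container-runtime/config.toml
# revert_no_cgroups /etc/nvidia-container-runtime/config.toml
```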

7. Tensorflow kernel and DSO version mismatch

When attempting to run the tensorflow Docker container to validate the GPU, you might get a message stating that the "kernel version does not match DSO version":

E external/local_xla/xla/stream_executor/cuda/cuda_diagnostics.cc:244] kernel version 535.146.2 does not match DSO version 545.23.6 -- cannot find working devices in this configuration

It seems like the tensorflow containers are somewhat hard-coded to specific driver versions. There might be a way to specify which DSO version it should use, but I have not yet found one. I've either downgraded tensorflow by using a specific tag, or upgraded the NVIDIA driver to the same version as the DSO version tensorflow uses. In my example above, changing from image: tensorflow/tensorflow:latest-gpu to image: tensorflow/tensorflow:2.14.0-gpu did the trick.

I tensorflow/compiler/xla/stream_executor/cuda/cuda_diagnostics.cc:309] kernel version seems to match DSO: 535.146.2

You could either experiment with changing the tensorflow version, or choose a matching NVIDIA driver version (i.e. in the above example, I could've chosen 545.23.6 as my NVIDIA driver version).
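When the log is long, a small helper that pulls the two versions out of the diagnostic line makes it easier to decide which driver version (or tensorflow tag) to aim for. Below is a sketch. It assumes the message keeps the wording shown above. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read tensorflow log lines on stdin and print the
# kernel (driver) and DSO versions from any "does not match DSO version" line.
dso_versions() {
  sed -n 's/.*kernel version \([0-9.]*\) does not match DSO version \([0-9.]*\).*/kernel=\1 dso=\2/p'
}

# Usage inside the LXC container:
# docker-compose up 2>&1 | dso_versions
```

Note that the versions in this message are printed without the leading zero: 535.146.2 corresponds to driver 535.146.02 in this guide.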

8. cgroup numbers could change

After upgrading my setup from Debian 11 to Debian 12, the cgroup numbers changed, causing the setup to break. Please keep this in mind, and update your LXC configuration file accordingly.
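To catch this after an upgrade, below is a sketch that lists the device major numbers present in /dev/nvidia* on the host that have no matching lxc.cgroup2.devices.allow line in the container config. It assumes the c MAJOR:* rwm form used in this guide and the usual ls -l layout, where the fifth column of a character device line is "MAJOR,". The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: read "ls -al /dev/nvidia*" output on stdin and print
# each character-device major number not allowed in the given LXC config.
missing_cgroup_majors() {
  conf_file=$1
  awk '/^c/ { m = $5; sub(/,$/, "", m); print m }' | sort -un |
    while read -r major; do
      # require "MAJOR:" so that e.g. 19 does not match an allowed 195
      if ! grep -Eq "^lxc\.cgroup2\.devices\.allow:[[:space:]]*c[[:space:]]+${major}:" "$conf_file"; then
        printf '%s\n' "$major"
      fi
    done
}

# Usage on the Proxmox host:
# ls -al /dev/nvidia* | missing_cgroup_majors /etc/pve/lxc/101.conf
```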

9. Number of device files varies

The number of device files that need to be passed through to the LXC container seems to vary. I'm not entirely sure whether this is caused by the driver version or the kernel version (or a combination), but there is no harm in adding all of them.

Observed on Debian 11 with kernel 5.x and NVIDIA driver 510.x;

root@foobar:~# ls -alh /dev/nvidia*
crw-rw-rw- 1 root root 195, 0 Jan 5 11:56 /dev/nvidia0
crw-rw-rw- 1 root root 195, 255 Jan 5 11:56 /dev/nvidiactl
crw-rw-rw- 1 root root 195, 254 Jan 5 11:56 /dev/nvidia-modeset
crw-rw-rw- 1 root root 237, 0 Jan 5 11:56 /dev/nvidia-uvm
crw-rw-rw- 1 root root 237, 1 Jan 5 11:56 /dev/nvidia-uvm-tools

Observed on Debian 12 with kernel 6.5.11 and NVIDIA driver 535.146.2:

root@foobar:~# ls -alh /dev/nvidia*
crw-rw-rw- 1 root root 195, 0 Jan 5 11:56 /dev/nvidia0
crw-rw-rw- 1 root root 195, 255 Jan 5 11:56 /dev/nvidiactl
crw-rw-rw- 1 root root 195, 254 Jan 5 11:56 /dev/nvidia-modeset
crw-rw-rw- 1 root root 237, 0 Jan 5 11:56 /dev/nvidia-uvm
crw-rw-rw- 1 root root 237, 1 Jan 5 11:56 /dev/nvidia-uvm-tools

/dev/nvidia-caps:
total 0
drw-rw-rw- 2 root root 80 Jan 5 11:56 .
drwxr-xr-x 19 root root 5.0K Jan 5 11:56 ..
cr-------- 1 root root 240, 1 Jan 5 11:56 nvidia-cap1
cr--r--r-- 1 root root 240, 2 Jan 5 11:56 nvidia-cap2

Adding only the five base files (i.e. excluding the ones inside /dev/nvidia-caps/), things seem to work in both scenarios (i.e. both in Debian 11 with five files, and in Debian 12 with seven files). I observed that if I added LXC mountpoints for only these five files and then ran TensorFlow, the two files in /dev/nvidia-caps/ suddenly appeared (even without any cgroup/mount entries for them in the LXC configuration file). I have therefore modified the guide to also add these two additional files, to be on the safe side:

root@docker1:~# ls -alh /dev/nvidia*
crw-rw-rw- 1 root root 195, 0 Jan 5 11:56 /dev/nvidia0
crw-rw-rw- 1 root root 195, 255 Jan 5 11:56 /dev/nvidiactl
crw-rw-rw- 1 root root 195, 254 Jan 5 11:56 /dev/nvidia-modeset
crw-rw-rw- 1 root root 237, 0 Jan 5 11:56 /dev/nvidia-uvm
crw-rw-rw- 1 root root 237, 1 Jan 5 11:56 /dev/nvidia-uvm-tools
root@docker1:~# cat docker-compose.yml
services:
  test:
    image: tensorflow/tensorflow:2.14.0-gpu
    command: python -c "import tensorflow as tf;tf.test.gpu_device_name()"
    deploy:
      resources:
        reservations:
          devices:
            - capabilities: [gpu]
root@docker1:~# docker-compose up
[+] Running 1/0
✔ Container test-plex-test-1 Created
Attaching to test-1
test-1 | 2024-01-05 14:23:31.775405: E tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:9342] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered
test-1 | 2024-01-05 14:23:31.775505: E tensorflow/compiler/xla/stream_executor/cuda/cuda_fft.cc:609] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered
test-1 | 2024-01-05 14:23:31.777114: E tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:1518] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered
test-1 | 2024-01-05 14:23:31.954796: I tensorflow/core/platform/cpu_feature_guard.cc:182] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.
test-1 | To enable the following instructions: AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.
test-1 | 2024-01-05 14:23:37.830799: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1886] Created device /device:GPU:0 with 4294 MB memory: -> device: 0, name: NVIDIA RTX A2000, pci bus id: 0000:82:00.0, compute capability: 8.6
test-1 exited with code 0
root@docker1:~# ls -alh /dev/nvidia*
crw-rw-rw- 1 root root 195, 0 Jan 5 11:56 /dev/nvidia0
crw-rw-rw- 1 root root 195, 255 Jan 5 11:56 /dev/nvidiactl
crw-rw-rw- 1 root root 195, 254 Jan 5 11:56 /dev/nvidia-modeset
crw-rw-rw- 1 root root 237, 0 Jan 5 11:56 /dev/nvidia-uvm
crw-rw-rw- 1 root root 237, 1 Jan 5 11:56 /dev/nvidia-uvm-tools

/dev/nvidia-caps:
total 0
drwxr-xr-x 2 root root 80 Jan 5 15:22 .
drwxr-xr-x 8 root root 640 Jan 5 15:22 ..
cr-------- 1 root root 240, 1 Jan 5 15:22 nvidia-cap1
cr--r--r-- 1 root root 240, 2 Jan 5 15:22 nvidia-cap2

There are also other devices, used for non-transcoding purposes such as rendering or display applications (e.g. VirtualGL), that you might need to add cgroup/mount configuration for:

root@foobar:~# ls -alh /dev/dri
total 0
drwxr-xr-x 3 root root 120 Jan 5 11:56 .
drwxr-xr-x 19 root root 5.0K Jan 5 11:56 ..
drwxr-xr-x 2 root root 100 Jan 5 11:56 by-path
crw-rw---- 1 root video 226, 0 Jan 5 11:56 card0
crw-rw---- 1 root video 226, 1 Jan 5 11:56 card1
crw-rw---- 1 root render 226, 128 Jan 5 11:56 renderD128

10. Required packages

It seems like the build-essential meta-package might be required to compile the NVIDIA driver on the host. Also, newer Proxmox versions use proxmox-headers-* as the naming scheme for the header packages (compared to pve-headers-*, the old naming scheme).
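On the Proxmox host, both points can be covered in one step before running the NVIDIA installer. This is a sketch, assuming a Proxmox VE release that uses the proxmox-headers-&lt;kernel release&gt; naming and that `uname -r` reports the running PVE kernel (e.g. 6.8.12-2-pve); the function names are hypothetical, and it has to be run as root on the host, not in the LXC container.

```shell
#!/bin/sh
# Sketch: install the compiler toolchain and the headers for the running kernel
# on a Proxmox host. Assumes the newer proxmox-headers-* naming
# (older releases used pve-headers-*).

# Name of the header package matching a given kernel release string.
header_package_for() {
  echo "proxmox-headers-$1"
}

# Install build-essential plus the headers for the currently running kernel.
install_build_deps() {
  apt-get update
  apt-get install -y build-essential "$(header_package_for "$(uname -r)")"
}
```

Calling `install_build_deps` as root on the host should pull in both packages; the LXC container itself does not need them, since the driver is installed there without kernel modules.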

11. Different kernel module types

Starting with driver version 560, NVIDIA provides two kernel module types: “NVIDIA Proprietary”, the kernel module that has been the default until now, and “MIT/GPL”, an open kernel module. During driver installation, you might be prompted to choose with the question “Multiple kernel module types are available for this system. Which would you like to use?”. The open kernel module (“MIT/GPL”) might work fine, but for now I’ve opted to continue using the NVIDIA proprietary module. The guide has been updated to reflect this.

12. Devices under /dev inside Docker not populated

This problem was discovered while creating v2 of my guide; please see v2 for more information. I’ve incorporated the fix into this guide as well, as it would probably have fixed the original issue (the one that caused me to create v2 in the first place).

Excellent guide, thank you!

Hi, thanks for the great guide. I followed the guide down to a tee with the only difference being I use an unprivileged container. I suspect this is the reason why I am getting the following error when trying to spin up the plex docker container:

“nvidia-container-cli: mount error: failed to add device rules: unable to find any existing device filters attached to the cgroup: bpf_prog_query(BPF_CGROUP_DEVICE) failed: operation not permitted: unknown”

Do you have any idea how to fix this? Thank you.

If I toggle c-groups=true in the NVIDIA container runtime config, it works, but it seems like a security issue. Source: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#step-3-rootless-containers-setup

Correction: setting ‘no-cgroups = true’ allows it to work.

Glad to hear that the guide was helpful.

Setting no-cgroups=true allows it to work, but you would like to avoid setting this flag? Or did I misunderstand?

Correct, sorry if I wasn’t clear. I would prefer not to have to set no-cgroups=true to force it to work, purely because I am unsure what the security implications are, and it just seems less robust overall if future driver upgrades end up overwriting the setting anyway.

This workaround only seems to be required for unprivileged containers, I do not need this if using privileged.

I do not know what no-cgroups=true does “under the hood”, but it does not seem to be a security issue as far as I can see. There are multiple references and discussions regarding the parameter, and none of them seems to raise a question regarding security.

There seem to be alternative ways to make it work, but I don’t know if/how these could be translated to an LXC setup (as they require changes to the kernel/boot):

https://github.com/NVIDIA/nvidia-docker/issues/1447#issuecomment-801479573
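For reference, the setting discussed in this thread lives in the NVIDIA Container Toolkit configuration, typically /etc/nvidia-container-runtime/config.toml, where it usually ships commented out as `#no-cgroups = false`. The sketch below (hypothetical function name; assumes GNU sed and that file layout) flips it to `no-cgroups = true`. As noted later in the comments, this should only be done for unprivileged LXC containers; in a privileged setup like the one in this guide, it broke the Docker GPU test.

```shell
#!/bin/sh
# Sketch: enable no-cgroups in the NVIDIA Container Toolkit config.
# Only intended for unprivileged LXC containers; it breaks privileged setups.
# Assumes GNU sed (-i, -E).

enable_no_cgroups() {
  local cfg="$1"
  # Replace a commented-out or explicit "no-cgroups = false" with "no-cgroups = true".
  sed -i -E 's/^#?[[:space:]]*no-cgroups[[:space:]]*=[[:space:]]*false/no-cgroups = true/' "$cfg"
}
```

Usage, after taking a backup of the file: `enable_no_cgroups /etc/nvidia-container-runtime/config.toml`, then re-run the Docker GPU test.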

Hey, can’t seem to get hw transcoding to work reliably. In my case, I skipped the docker part and ran plex on the lxc itself. I tried privileged and unprivileged, with the 515.65.01 and 515.76 driver. I get nvidia-smi output the same as expected, and the five /dev/nvidia* files in the lxc. It only (mostly) works when choosing “Convert automatically” quality setting. This is with a 1660 super which should be plenty supported. Choosing a custom quality setting I get these errors in the plex logs:

[Req#3b7c/Transcode] Codecs: hardware transcoding: opening hw device failed – probably not supported by this system, error: Generic error in an external library

Sometimes if I wait long enough it will transcode. Any help is appreciated.

To rule out issues with the driver or Plex itself, I would attempt to do some other hardware offloading on the card. The two examples I have (using nvidia/cuda and TensorFlow) could probably be replicated “manually” without using Docker. You could also attempt to hardware encode using FFmpeg or similar.

If these also fail, I would focus further troubleshooting on the driver/host. If they work, however, I would focus my troubleshooting on Plex.
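As an example of the FFmpeg route: the command below encodes five seconds of FFmpeg’s built-in test pattern with the h264_nvenc encoder and discards the result, so Plex is not involved at all. It assumes an FFmpeg build with NVENC support inside the LXC container (not every distribution build has it); the function name is hypothetical. If it fails while nvidia-smi works, that suggests looking at the driver libraries or the FFmpeg build rather than Plex.

```shell
#!/bin/sh
# Sketch: NVENC smoke test that does not involve Plex.
# Encodes 5 s of the lavfi "testsrc" pattern with h264_nvenc and discards the output.
nvenc_smoke_test() {
  ffmpeg -hide_banner -loglevel error \
    -f lavfi -i testsrc=duration=5:size=1280x720:rate=30 \
    -c:v h264_nvenc -f null -
}

# Only attempt the encode where both FFmpeg and the NVIDIA driver tools exist.
if command -v ffmpeg >/dev/null 2>&1 && command -v nvidia-smi >/dev/null 2>&1; then
  if nvenc_smoke_test; then
    echo "NVENC encode OK"
  else
    echo "NVENC encode FAILED" >&2
  fi
fi
```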

This guide is amazing!!!!! It is the first full guide I found that had every step needed and corrections. THANK YOU!!!

This was incredibly useful @Joachim!

Thanks!

Your guide is still relevant today… and the only one which got my Plex LXC passthrough up and running correctly. A couple of things which might be useful when using the GPU for other things besides transcoding:

https://github.com/keylase/nvidia-patch/

Also use:

ls -alh /dev/nv*

ls -alh /dev/dri

to get all the Cgroups #s to pass into your LXC.

THANK YOU!!!!!!

What would /dev/dri be for? I only added the cgroups from /dev/nvidia*, which has been sufficient for my setup for 1+ year through multiple driver updates. Maybe it’s card-dependent (i.e. varies between different cards)? Did you actually have issues before adding /dev/dri, or did you never try without it?

/dev/dri is not required for transcoding, but for direct rendering/display applications, like through VirtualGL.

Forgot to reply here, but thanks, information relating to /dev/dri was added to the guide.

Thank you for posting this guide. I used it this week and everything worked as expected.

I opted to install plex directly into the LXC rather than use Docker (mostly because the LXC is already containerizing it, and I didn’t see a need for a container inside a container).

Glad it could be of help! I used Docker since I already had a generic Docker LXC (for other apps and services), so it made sense to use Docker for Plex as well. And I did not want to run Docker directly on the host.

Hi There,

Love the guide, and I can get transcoding working after initial setup.

Following a Host power off and relocation the transcoding stops working and goes back to relying on the CPU.

I have double checked all the steps but every time the host is powered off, even after reinstalling, transcoding is listed in nvidia-smi but the CPU usage and performance of the stream is indicating that it is not transcoding using the card.

Any pointers?

Thanks,

Hi,

I can’t really say what the issue might be, no. The setup I use survives reboot/shutdown just fine (the same setup that is described in this guide).

Issue solved: make sure you check your subtitle settings.

First, thank you for the clear instructions. I ran into a different problem. The driver installed and nvidia-smi executed correctly, but the docker test failed. It complained about cgroups, but not the issue you noted. If anyone needs it, here is a link to the fix on the forums. https://forum.proxmox.com/threads/docker-is-unable-to-access-gpu-in-lxc-gpu-passthrough.125066/. Very simple, just adjust the Nvidia config and you’re good to go.

Hi,

I assume this was in an unprivileged LXC container? If so, that might explain why you need to add it, but I didn’t (as I’m using a privileged LXC container).

Seems like it’s related to rootless/non-privileged vs. root/privileged. I have tested this in my setup, and it actually breaks it (as I’m running it as root/privileged). This is consistent with the NVIDIA documentation (where it explicitly states that you should not enable it in root/privileged mode).

I’ve updated my guide accordingly.

Thank you for such a good write-up, but it seems to be broken in Proxmox 8.1.3. We need build-essential, and even then it fails.

Also, installing the headers fails because the only pve-headers-* packages are for the 6.2 kernel, while PVE 8.1.3 uses 6.5, whose headers are only installable via proxmox-headers-*. I don’t think it’s pulling it all in properly.

I’m running this setup just fine on my Proxmox 8.1.3 server.

The headers are pulled via virtual packages (linking to the new names);

I notice that I have build-essential installed, but I’m not sure it’s needed? Or does the NVIDIA driver fail due to missing packages if it’s not installed?

Edit: Seems like build-essential might be needed, yes. I’ve added both aspects to the guide.

You saved my entire server. Thought my P600 was e-waste, but this gave it new life. Thank you!!!!!

Thank you very much for the guide. I have a problem: I’m stuck when I try to install the drivers in the container with the --no-kernel-module flag; the extraction stops with the error “Signal caught, cleaning up”.

Hi,

I guess you overcame that issue, judging by your next comment. I’m facing the same issue.

What did you do to solve it?

thanks

root@docker:~# docker run --rm --gpus all nvidia/cuda:12.2-base nvidia-smi
Unable to find image 'nvidia/cuda:12.2-base' locally
docker: Error response from daemon: manifest for nvidia/cuda:12.2-base not found: manifest unknown: manifest unknown.
See 'docker run --help'

This has been solved by fetching the latest tag dynamically via a one-liner. The guide has been updated accordingly.

This is a top-tier guide on the process involved in getting passthrough to LXC containers working. I can get nvidia-smi running within the LXC container now, but I already have a fair few containers running, so I will have to investigate getting those backed up first (Portainer is the main one, managing several other containers), as I would not like to lose them all.

I would also suggest including this url: https://download.nvidia.com/XFree86/Linux-x86_64/ as a reference point for getting the run files for users that see this late in the game and want to get the latest drivers.

Thanks, glad I could be of help.

Regarding “getting the latest driver“, I’ve been meaning to do something about that. Just updated the guide with logic to always fetch the latest available driver dynamically.
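As one possible approach (not necessarily the logic the guide uses), the directory linked above publishes a latest.txt file. The sketch below assumes that file contains a single line of the form `<version> <relative path to .run file>`; the function names are hypothetical. Since the host and the LXC container must run the same driver version, resolve the version once and reuse the same .run file in both places.

```shell
#!/bin/sh
# Sketch: resolve the latest NVIDIA driver .run URL.
# Assumes latest.txt holds one line: "<version> <relative path to .run file>".
base_url="https://download.nvidia.com/XFree86/Linux-x86_64"

# Print the version (first field) of a latest.txt line.
driver_version_from() {
  printf '%s\n' "$1" | awk 'NF >= 2 { print $1; exit }'
}

# Print the absolute .run URL for a latest.txt line.
driver_url_from() {
  printf '%s\n' "$1" | awk -v base="$base_url" 'NF >= 2 { print base "/" $2; exit }'
}

# Fetch latest.txt (network access required).
fetch_latest_line() {
  curl -fsSL --max-time 20 "${base_url}/latest.txt"
}
```

For example, `line="$(fetch_latest_line)"` followed by `curl -fLO "$(driver_url_from "$line")"` downloads the installer once; it is then run normally on the host and with --no-kernel-module inside the LXC container.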

I can’t get the NVIDIA drivers to install. The error is as follows, and I have yet to find a googleable solution. Any idea?

[ 739.899684] NVRM: The NVIDIA GPU 0000:33:00.0

NVRM: (PCI ID: 10de:2786) installed in this system has

NVRM: fallen off the bus and is not responding to commands.

Have never encountered that problem, so can’t say for sure.

The search term “NVRM The NVIDIA GPU installed in this system has fallen off the bus and is not responding to commands” gives me a plethora of results with different problems and potential solutions. Seems like it could be anything from hardware, to BIOS, to driver, to NVIDIA Optimus, to third-party tool bbswitch, etc.

Keep getting this problem. Any help please?

Attaching to test-1

test-1 | 2024-09-28 17:15:54.301312: E external/local_xla/xla/stream_executor/cuda/cuda_fft.cc:485] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered

test-1 | 2024-09-28 17:15:54.311871: E external/local_xla/xla/stream_executor/cuda/cuda_dnn.cc:8454] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered

test-1 | 2024-09-28 17:15:54.315007: E external/local_xla/xla/stream_executor/cuda/cuda_blas.cc:1452] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

test-1 | 2024-09-28 17:15:54.323010: I tensorflow/core/platform/cpu_feature_guard.cc:210] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.

test-1 | To enable the following instructions: AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.

test-1 | 2024-09-28 17:15:55.351920: E external/local_xla/xla/stream_executor/cuda/cuda_driver.cc:266] failed call to cuInit: UNKNOWN ERROR (34)

test-1 exited with code 0

Managed to fix it, leaving this comment here for anyone experiencing the same issue:

# Proxmox version

pve-manager/8.2.7/3e0176e6bb2ade3b (running kernel: 6.8.12-2-pve)

# LXC version

debian-12-standard_12.2-1_amd64.tar.zst

Followed the tutorial perfectly, BUT needed the following pieces of software:

apt update

apt install curl -y

apt install xorg -y

apt install libvulkan1 -y

apt install pkg-config -y

apt install libglvnd-dev -y

Without these, the NVIDIA driver installer reported errors at the time but would still “install” correctly, allowing me to get the later information and making it “look” like everything was working.

Without the above dependencies, the TensorFlow test would fail, and furthermore, testing HW NVENC encoding in Jellyfin (as I don’t want to pay for Plex Pass) failed during playback.

If you install the above dependencies, the NVIDIA installer works correctly and does not cause:

test-1 | 2024-09-28 17:15:55.351920: E external/local_xla/xla/stream_executor/cuda/cuda_driver.cc:266] failed call to cuInit: UNKNOWN ERROR (34)

I have done this in a privileged container, but will shortly be trying this out in an unprivileged one for security reasons.

———————-

Shout out to the host of this blog, thanks for the information dude, this writeup was great. It’s so important that we document these things, thanks for taking the time to do this =)

FYI, as of Dec 2024,

docker run --rm --gpus all nvidia/cuda:11.0-base nvidia-smi

no longer works, as the tag was removed from the repo. You need to run

docker run --rm --gpus all nvidia/cuda:11.0.3-base nvidia-smi

Thanks for the update. nvidia/cuda doesn’t have a “latest” tag, and they seem to remove old tags after a while. That doesn’t really pair well with a static guide that people “blindly” copy-paste from (-:

I’ve made an attempt to solve this by fetching the latest tag via a one-liner. The guide has been updated accordingly. That might very well break in the future, but I guess that’s a problem for future me.
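A comparable approach is sketched below; it is not the one-liner from the guide. It assumes Docker Hub’s public v2 tags endpoint (/v2/repositories/nvidia/cuda/tags) with its `name` filter and `page_size` parameters, only looks at the first page of results, and picks the highest X.Y.Z-base-ubuntuNN.NN tag by version sort (GNU `sort -V`). The function names are hypothetical.

```shell
#!/bin/sh
# Sketch: pick the newest nvidia/cuda "base" tag.
# Assumes the Docker Hub v2 tags API; only the first page (100 tags) is inspected.

# Print tag names from the Docker Hub API response (network access required).
# Any other "name" fields in the JSON are discarded by latest_base_tag below.
fetch_cuda_tags() {
  curl -fsSL --max-time 20 \
    "https://hub.docker.com/v2/repositories/nvidia/cuda/tags?page_size=100&name=base-ubuntu" |
    grep -oE '"name": *"[^"]+"' | sed -E 's/"name": *"([^"]+)"/\1/'
}

# Read tags on stdin and print the highest X.Y.Z-base-ubuntuNN.NN tag.
latest_base_tag() {
  grep -E '^[0-9]+\.[0-9]+\.[0-9]+-base-ubuntu[0-9]+\.[0-9]+$' | sort -V | tail -n 1
}
```

The two can then be combined for the Docker test: `docker run --rm --gpus all "nvidia/cuda:$(fetch_cuda_tags | latest_base_tag)" nvidia-smi`.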

nvidia-smi works on the host and in the LXC, but I cannot do the Docker test. It keeps giving me:

docker: Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error running prestart hook #0: exit status 1, stdout: , stderr: Auto-detected mode as ‘legacy’

nvidia-container-cli: mount error: failed to add device rules: unable to find any existing device filters attached to the cgroup: bpf_prog_query(BPF_CGROUP_DEVICE) failed: operation not permitted: unknown.

Proxmox VE: 8.3.3

LXC = Debian 12 (spun up using one of the tteck scripts)

nvidia driver = 550.144.03